Reality of Serverless: Pros, Costs, Security, and Trade-offs

Introduction – The Promise and Pitfalls of Serverless Cloud

Serverless computing has quickly evolved from a buzzword to a mainstream solution shaping how modern applications are built and deployed. By removing the need for developers to manage infrastructure, serverless promises cost-efficiency, scalability, and speed. Instead of provisioning entire servers, you only pay for what you use, and your cloud provider handles the scaling automatically. For many startups and enterprises alike, this has opened the doors to building applications faster and with fewer operational headaches.

But as with every technology, the story isn’t all sunshine and savings. Serverless brings along its own unique set of challenges—from vendor lock-in to hidden costs and even potential security blind spots. The very nature of giving up control of the infrastructure that powers your applications comes with trade-offs that developers and businesses can’t ignore. This is where the “promise vs. reality” of serverless begins to reveal itself.

Understanding these realities is crucial because serverless is not just a trend—it’s becoming the default approach for many companies. Yet, before you dive headfirst into migrating everything to serverless, it’s worth unpacking what makes it so attractive and what potential roadblocks could stall your plans.

The Benefits of Serverless Cloud

Serverless cloud is not just a buzzword; it’s a shift in how we approach application design and infrastructure. Developers no longer need to spend sleepless nights worrying about provisioning servers, scaling applications during peak traffic, or paying for unused compute resources. Instead, serverless allows them to focus purely on building features that matter to users.

One of the biggest advantages is cost efficiency. Traditional hosting models often force developers to pay for reserved capacity, even if it sits idle most of the time. With serverless, you are typically billed per invocation and for the execution time and memory your code actually uses. For startups and solo developers, this is game-changing: launching an app no longer requires thousands of dollars in upfront infrastructure costs. If you’ve read our post on Top Passive Income Ideas for Developers in 2025, you’ll know that serverless architecture plays a key role in enabling low-cost, scalable side projects that can earn revenue without high overhead.

Scalability is another powerful benefit. With serverless, if your app suddenly goes viral overnight, the platform automatically adds capacity to absorb the surge, up to the concurrency limits your provider sets. Conversely, when traffic drops, so do your costs. This elasticity makes serverless ideal for projects with unpredictable or seasonal demand, much like how developers are leveraging Edge AI in 2025 for smarter and scalable offline solutions.

Finally, serverless simplifies developer workflows. By outsourcing infrastructure complexity to the cloud provider, teams can iterate faster, integrate CI/CD pipelines, and reduce time-to-market. Combined with tools like DevTools as a Service, developers are now working in environments that feel frictionless, allowing them to experiment, test, and deploy more efficiently than ever before.

The Hidden Costs of Serverless Cloud

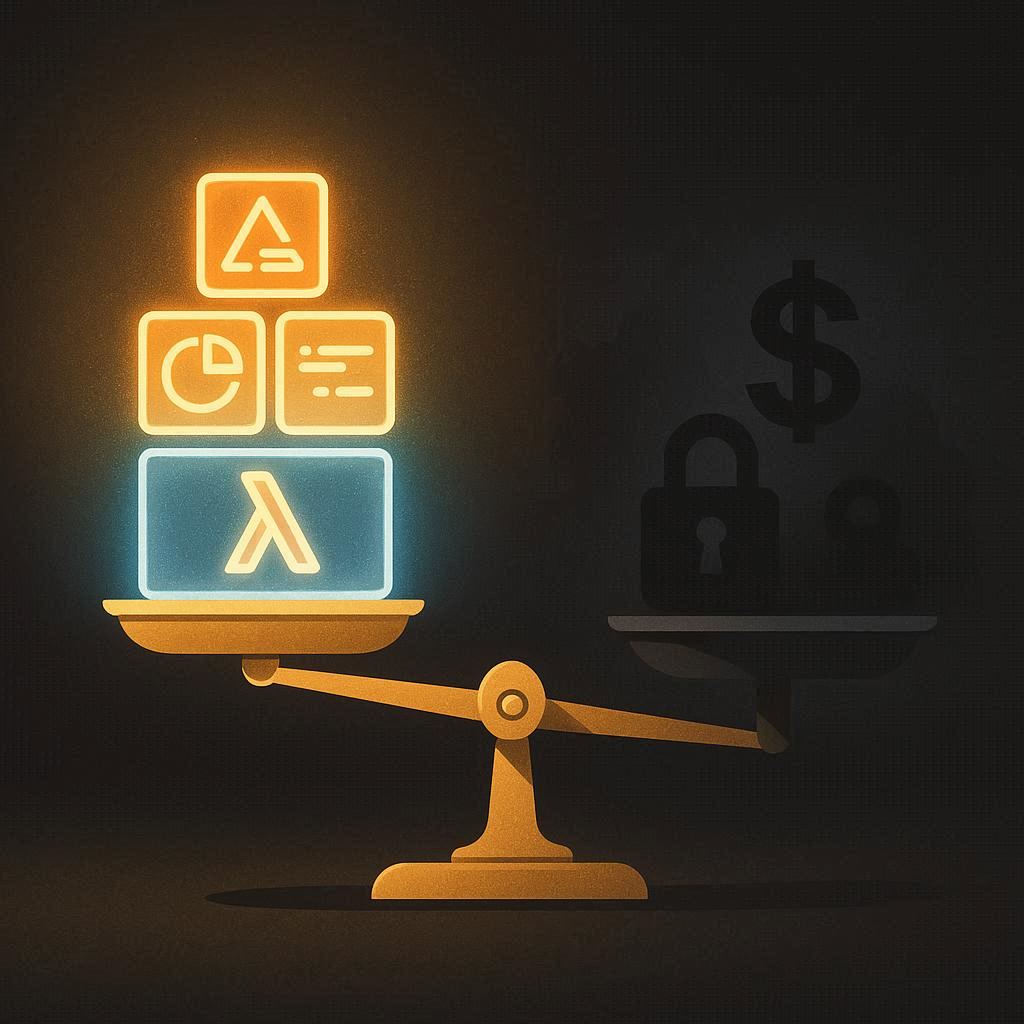

While serverless cloud sounds like the holy grail of modern computing, it’s not without trade-offs. One of the most significant concerns is vendor lock-in. When you build an application on platforms like AWS Lambda, Google Cloud Functions, or Azure Functions, you’re often tied to that provider’s ecosystem. Migrating later can be complex, time-consuming, and expensive. This dependency can create long-term challenges for developers and businesses who want more freedom and control over their stack. We explored a similar issue in our blog on The Dark Side of No-Code Tools, where convenience sometimes comes at the cost of flexibility.

Another hidden cost comes in the form of performance overhead. Serverless functions are stateless, and the provider creates and discards execution environments as demand changes. When a request arrives and no warm instance is available, the platform must first start a new one and load your code, which introduces what’s called a “cold start” delay; later requests that reuse that instance skip it. For latency-sensitive applications, such as financial transactions, real-time analytics, or gaming, these delays can degrade the user experience. Compare this with traditional setups or edge computing (see our post on The Rise of Edge AI in 2025), where data is processed closer to users for faster response times.
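To make the warm-versus-cold difference concrete, here is a minimal sketch of a common mitigation: create expensive resources once per instance and reuse them on later invocations. The `handler(event, context)` entry point, the `_get_client` helper, and the 50 ms sleep that stands in for real setup work are all assumptions for illustration, not any provider's actual API.

```python
import time

# Module-level state survives between invocations that reuse the same
# instance, so it is only rebuilt after a cold start.
_client = None


def _get_client() -> dict:
    """Create the expensive resource once per execution environment."""
    global _client
    if _client is None:
        time.sleep(0.05)  # stand-in for loading config or opening connections
        _client = {"connected_at": time.time()}
    return _client


def handler(event: dict, context: object = None) -> dict:
    """Hypothetical entry point that reports whether setup had to run."""
    was_cold = _client is None
    started = time.perf_counter()
    _get_client()
    setup_ms = (time.perf_counter() - started) * 1000
    return {"cold_start": was_cold, "setup_ms": setup_ms}
```

Calling `handler({})` twice in the same process should report a cold start with a noticeable setup time on the first call and a near-zero setup time on the second, which is roughly what users experience as the first slow request after a quiet period.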

Finally, cost unpredictability can creep up. While serverless is marketed as “pay-as-you-go,” heavy usage can lead to unexpected bills if monitoring isn’t in place. A sudden spike in traffic could result in higher costs than expected, catching small startups off guard. Unlike fixed infrastructure costs, where you know your monthly bill, serverless charges scale with every execution. Developers who aren’t careful with optimization—like inefficient function calls or unnecessary triggers—may quickly find themselves overspending. For more on optimizing performance without overspending, check out our guide on Speeding Up WordPress by 300% Without Expensive Hosting.
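A rough way to see how a bill scales with traffic is to model it as request charges plus compute charges. The sketch below assumes a pricing model of a flat fee per million invocations plus a fee per GB-second of execution; the two rates are illustrative placeholders, not a quote from any provider's price list, and the function and class names are made up for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PricingAssumptions:
    """Illustrative rates only; check your provider's current price list."""
    price_per_million_requests: float = 0.20  # assumed USD per 1M invocations
    price_per_gb_second: float = 0.0000167    # assumed USD per GB-second


def estimate_monthly_cost(
    invocations: int,
    avg_duration_ms: float,
    memory_mb: int,
    pricing: PricingAssumptions = PricingAssumptions(),
) -> float:
    """Estimate a monthly bill as request charges plus compute charges."""
    request_cost = invocations / 1_000_000 * pricing.price_per_million_requests
    # GB-seconds = invocations x duration in seconds x memory in GB
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    compute_cost = gb_seconds * pricing.price_per_gb_second
    return round(request_cost + compute_cost, 2)


if __name__ == "__main__":
    normal = estimate_monthly_cost(2_000_000, avg_duration_ms=200, memory_mb=512)
    spike = estimate_monthly_cost(200_000_000, avg_duration_ms=200, memory_mb=512)
    print(f"normal month: ${normal}, viral month: ${spike}")
```

Because every term is proportional to the invocation count, a 100x traffic spike means roughly a 100x bill under these assumptions. Doubling average duration or memory doubles the compute portion too, which is why inefficient functions get expensive quickly.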

Use Cases Where Serverless Truly Shines

Despite its drawbacks, serverless cloud computing isn’t just hype—it’s genuinely transformative in specific scenarios. One of the biggest advantages is how it enables startups and small teams to launch products quickly without massive upfront costs. Instead of spending months setting up servers, configuring load balancers, and managing infrastructure, developers can simply write functions and deploy them. This makes serverless ideal for minimum viable products (MVPs), hackathons, or proof-of-concept projects where speed matters more than deep customization. We highlighted a similar mindset in our article on How to Build a $50k/year Micro-SaaS (While Keeping Your Day Job), where agility and lean setups often determine success.

Another strong use case is event-driven workloads. Serverless excels when tasks are triggered by specific events—such as a user uploading an image, submitting a form, or making a payment. Instead of running a server 24/7, you can design a system where resources only activate when needed. This model is especially popular in e-commerce, real-time notifications, and IoT applications, where workloads are unpredictable. For example, imagine a system that automatically generates thumbnail images whenever a user uploads a photo—serverless functions can handle this seamlessly without wasting idle resources. This complements trends like IoT Integration in 2025, where devices constantly trigger micro-events.
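As a sketch of what the thumbnail example might look like, the handler below reacts to an upload event and produces a list of thumbnail jobs. The event shape is modeled on an object-storage upload notification (a `Records` list carrying a bucket name and a URL-encoded object key). The `thumbnails/` prefix, the 256-pixel limit, and the function names are assumptions for illustration, and the actual image resizing is left out because it would need an imaging library.

```python
from urllib.parse import unquote_plus

THUMBNAIL_MAX_SIDE = 256  # assumed target size in pixels


def thumbnail_size(width: int, height: int,
                   max_side: int = THUMBNAIL_MAX_SIDE) -> tuple[int, int]:
    """Shrink dimensions so the longer side fits max_side, keeping aspect ratio."""
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    scale = min(1.0, max_side / max(width, height))  # never upscale
    return max(1, round(width * scale)), max(1, round(height * scale))


def handle_upload(event: dict, context: object = None) -> list[dict]:
    """Turn each upload record in the event into a thumbnail job description."""
    jobs = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        key = unquote_plus(record["s3"]["object"]["key"])  # keys arrive URL-encoded
        if key.startswith("thumbnails/"):
            continue  # skip our own output so the function does not trigger itself
        jobs.append({"bucket": bucket, "source": key, "target": f"thumbnails/{key}"})
    return jobs
```

The check on the `thumbnails/` prefix matters in practice: if the function writes its output to the same location it listens on, each thumbnail would trigger another invocation, which is one of the "unnecessary triggers" that can inflate a bill.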

Lastly, serverless shines in scalable backend automation. Think of chatbots, scheduled tasks, and background data processing pipelines. Instead of maintaining dedicated servers for tasks like sending daily emails or analyzing log files, businesses can offload them to serverless functions. Even large enterprises are adopting this model for microservices architectures, where different components of an app communicate through small, independent functions. Combined with AI workflows—like those we explored in How AI Is Reshaping Dev Workflows—serverless becomes a key enabler for modern, modular, and scalable applications.

The Future of Serverless — What’s Next in 2025 and Beyond

Serverless computing has already disrupted how developers build and deploy applications, but its evolution is far from over. Serverless architectures are beginning to merge with AI-native workflows, pairing automation with intelligence. Instead of simply executing functions on demand, serverless platforms may increasingly take on autonomous decision-making. For instance, imagine an AI-driven function that not only processes data but also optimizes its own execution based on resource usage. This vision aligns with the direction we discussed in Agentic AI: The Next Evolution of Artificial Intelligence (2025 Guide), where software agents take on tasks once limited to humans.

Another major trend is the rise of multi-cloud and hybrid serverless solutions. Developers are becoming wary of vendor lock-in, where being tied to AWS Lambda or Azure Functions can limit flexibility and increase costs over time. In response, new platforms are emerging that let teams run serverless functions across multiple providers, or even on private infrastructure. This hybrid approach can improve compliance, redundancy, and cost optimization. It’s similar to the push we’ve seen in The New Era of Self-Hosting, where developers want greater control without giving up modern scalability.

Finally, serverless is expected to integrate more tightly with edge computing. Instead of functions running in distant data centers, they’ll increasingly run on edge nodes closer to users, reducing latency and improving real-time performance. This could revolutionize industries like gaming, autonomous vehicles, and augmented reality. We’ve already seen hints of this in our article on The Rise of Edge AI in 2025, where smart devices gain intelligence at the edge. By merging edge and serverless, developers will be able to deliver lightning-fast, globally distributed apps without needing massive infrastructure teams.

The Downsides Developers Can’t Ignore (Even in the Future)

As exciting as serverless computing is, it’s important to remember that no technology comes without trade-offs. One of the biggest challenges developers face is vendor lock-in. While services like AWS Lambda, Google Cloud Functions, and Azure Functions make it easy to get started, the deeper your architecture relies on a provider’s proprietary services, the harder it becomes to migrate elsewhere. This is especially risky for startups and small businesses that may later find themselves stuck with escalating costs. The cautionary lesson here parallels the issues we covered in Why Developers Are Earning Less in 2025: The Hidden Impact of AI on Rates, where dependency on tools and platforms can silently eat away at margins.
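One way to limit that coupling is to keep business logic free of provider-specific types and confine each provider's event format to a thin adapter. The sketch below assumes two made-up providers with different handler signatures; `Order`, `process_order`, and both handler names are hypothetical and only illustrate the pattern.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    order_id: str
    amount_cents: int


def process_order(order: Order) -> dict:
    """Provider-independent business logic: no cloud SDK types in or out."""
    if order.amount_cents <= 0:
        return {"order_id": order.order_id, "status": "rejected"}
    return {"order_id": order.order_id, "status": "accepted"}


def from_http_json(body: str) -> Order:
    """Parse a JSON request body into the domain type."""
    data = json.loads(body)
    return Order(order_id=str(data["order_id"]), amount_cents=int(data["amount_cents"]))


def provider_a_handler(event: dict, context: object = None) -> dict:
    """Adapter for a hypothetical provider that passes the body under event['body']."""
    result = process_order(from_http_json(event["body"]))
    return {"statusCode": 200, "body": json.dumps(result)}


def provider_b_handler(request_body: bytes) -> tuple[str, int]:
    """Adapter for a second hypothetical provider that passes raw request bytes."""
    result = process_order(from_http_json(request_body.decode("utf-8")))
    return json.dumps(result), 200
```

With this layout, moving providers means rewriting a few lines of adapter code rather than the logic itself. It does not remove lock-in that comes from managed databases, queues, or identity services, so treat it as a partial hedge.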

Another downside is cold start latency. While serverless platforms are built for scalability, a request that arrives when no warm instance is available triggers a “cold start”: a delay while the platform spins up a new execution environment. For some applications, like streaming, gaming, or high-frequency trading, even a few milliseconds of added latency can be unacceptable. Although providers are reducing this with pre-warming and edge-based deployments, it remains a concern. This is particularly critical when compared to the performance-focused discussions we had in Core Web Vitals in 2025, where milliseconds can affect SEO rankings.

Finally, serverless can also create observability and debugging challenges. Since functions are distributed and ephemeral, traditional logging and monitoring tools often fall short. Debugging errors across hundreds of micro-invocations is much harder than tracing through a monolithic system. This complexity is why developers are turning to advanced observability stacks, similar to the ones we highlighted in Frontend Observability: Tools for Debugging Real User Experiences. Without robust observability, developers risk losing control over how their apps behave in production.
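A common first step toward better observability is structured logging with a correlation ID that travels with the event, so log lines from separate functions can be stitched back into one request. The sketch below uses only Python's standard `logging` module. The `correlation_id` and `function_name` fields, the logger name, and the two function names in `demo` are assumptions for illustration.

```python
import io
import json
import logging
import uuid


class JsonFormatter(logging.Formatter):
    """Emit each log record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "level": record.levelname,
            "message": record.getMessage(),
            "correlation_id": getattr(record, "correlation_id", None),
            "function_name": getattr(record, "function_name", None),
        })


def build_logger(stream) -> logging.Logger:
    """Build a logger that writes JSON lines to the given stream."""
    logger = logging.getLogger("serverless-demo")
    logger.handlers.clear()
    logger.setLevel(logging.INFO)
    logger.propagate = False
    stream_handler = logging.StreamHandler(stream)
    stream_handler.setFormatter(JsonFormatter())
    logger.addHandler(stream_handler)
    return logger


def handle(event: dict, logger: logging.Logger, function_name: str) -> str:
    """Reuse the caller's correlation ID, or mint one at the edge of the system."""
    correlation_id = event.get("correlation_id") or str(uuid.uuid4())
    logger.info("processing event",
                extra={"correlation_id": correlation_id, "function_name": function_name})
    return correlation_id


def demo() -> list[dict]:
    """Run two hypothetical functions that share one correlation ID."""
    stream = io.StringIO()
    logger = build_logger(stream)
    cid = handle({}, logger, "resize-image")
    handle({"correlation_id": cid}, logger, "store-metadata")
    return [json.loads(line) for line in stream.getvalue().splitlines()]
```

Filtering logs on a single `correlation_id` then returns every step of one request across functions, which is the part that traditional per-server logs make difficult.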

Conclusion — Why Serverless Still Matters for Developers in 2025

Serverless computing is not just another passing trend—it represents a fundamental shift in how we think about building, scaling, and maintaining software. While it comes with its challenges—vendor lock-in, cold starts, and observability gaps—the long-term benefits often outweigh the drawbacks. Developers who learn to navigate these trade-offs can unlock unprecedented levels of efficiency, scalability, and innovation.

What’s clear is that serverless is not replacing traditional development; instead, it’s becoming an essential part of the broader cloud-native toolkit. For many projects, hybrid approaches that combine serverless with containerization and traditional infrastructure deliver the best results. This mirrors the broader trends we’ve seen in articles like The Rise of Edge AI in 2025 and DevTools as a Service, where flexibility and adaptability remain the defining traits of successful developers.

Looking ahead, developers who embrace serverless early will have a competitive edge. As platforms evolve with faster cold-start times, more transparent pricing, and improved observability, the barriers will continue to shrink. By preparing now, you’re not just future-proofing your skills—you’re positioning yourself at the forefront of a computing revolution that will define the next decade of software.

FAQs

1. What is serverless computing, and how is it different from traditional cloud computing?

Serverless computing allows developers to run applications without managing servers. Unlike traditional cloud hosting, where you provision and maintain virtual machines, serverless platforms like AWS Lambda or Google Cloud Functions automatically allocate resources on demand. You only pay for the compute time your code actually consumes.

2. Is serverless computing cheaper than traditional hosting?

For many use cases—especially workloads with unpredictable or intermittent traffic—serverless can be more cost-effective since you only pay for execution time. However, for applications with heavy and constant usage, traditional hosting or containerization may be more economical. The real cost benefits depend on your workload patterns.
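To see where that crossover might sit for your own workload, you can compare a flat monthly server price against a per-request serverless price. The sketch below assumes an all-in serverless cost expressed per million requests; the figures in the usage note are made up for illustration, and the function names are hypothetical.

```python
def serverless_cost(requests_per_month: int, cost_per_million: float) -> float:
    """Monthly serverless bill under an assumed all-in price per million requests."""
    return requests_per_month / 1_000_000 * cost_per_million


def break_even_requests(fixed_monthly_cost: float, cost_per_million: float) -> int:
    """Monthly request count at which serverless costs the same as the fixed server."""
    if cost_per_million <= 0:
        raise ValueError("cost_per_million must be positive")
    return round(fixed_monthly_cost / cost_per_million * 1_000_000)


def cheaper_option(requests_per_month: int, fixed_monthly_cost: float,
                   cost_per_million: float) -> str:
    """Return which option is cheaper at the given traffic level."""
    if serverless_cost(requests_per_month, cost_per_million) <= fixed_monthly_cost:
        return "serverless"
    return "fixed"
```

For example, with an assumed $40 per month server and an assumed $2 per million requests, `break_even_requests(40.0, 2.0)` gives 20 million requests per month. Below that volume serverless is the cheaper choice in this simplified model, and above it the fixed server wins, assuming the server can actually handle the load.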

3. What are the main challenges of serverless computing in 2025?

The biggest challenges include vendor lock-in (difficulty moving between platforms), cold-start latency (initial delays when spinning up functions), and limited observability. However, new monitoring tools and hybrid solutions are emerging to address these concerns.

4. Can serverless be used for large-scale enterprise applications?

Yes. In fact, many enterprises are already using serverless for mission-critical workloads, often in combination with microservices and containerized infrastructure. The scalability and rapid development cycles make it especially useful for APIs, event-driven architectures, and backend automation.

5. Is serverless computing the future of software development?

While serverless won’t replace all forms of infrastructure, it’s becoming a vital part of modern software architecture. Developers who embrace serverless today are preparing themselves for a future where efficiency, scalability, and cost-optimization are central to building competitive applications.

Hi, I'm Abdul Rehman Khan, founder of Dev Tech Insights & Dark Tech Insights. I specialize in turning ideas into fast, scalable, and modern web solutions. From startups to enterprises, I've helped teams launch products that grow.
