Neuromorphic Computing in 2025: How Brain-Inspired Chips Are Redefining AI Performance

Introduction: The Dawn of Neuromorphic AI

As traditional computing architectures hit their limits, neuromorphic chips (processors designed to emulate the neural networks of the human brain) are emerging as a leading candidate for future AI hardware. As of 2025, the neuromorphic computing market is projected to grow at a 108% CAGR, with applications spanning robotics, healthcare, and edge devices.

Why This Matters Now

- Energy Crisis in AI: Data centers consume roughly 2% of global electricity, and neuromorphic chips promise to cut the energy cost of suitable AI workloads by up to 1000x.

- Real-Time Demands: Autonomous vehicles and drones need instant decision-making.

- Edge Revolution: The shift from cloud to local processing accelerates adoption.

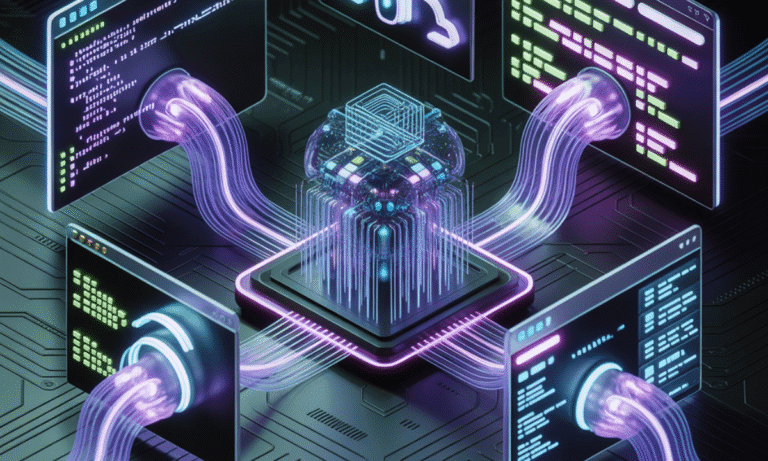

1. How Neuromorphic Chips Work: Silicon That Thinks

These chips differ fundamentally from traditional processors:

| Feature | Conventional Chips | Neuromorphic Chips |
|---|---|---|
| Architecture | Von Neumann | Spiking Neural Nets |
| Power Consumption | 300W+ | 0.1-1W |
| Processing | Sequential | Parallel & Event-Driven |
| Learning | Cloud-dependent | On-device adaptation |
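To make the "Sequential" vs. "Parallel & Event-Driven" row more concrete, here is a minimal sketch that counts synaptic operations for a clocked dense layer versus an event-driven one. The layer size (100 inputs, 50 outputs) and the ~2% spike rate are hypothetical values chosen for illustration; real hardware savings depend on how sparse the actual activity is.

```python
import random

def dense_synaptic_ops(num_inputs: int, num_outputs: int, timesteps: int) -> int:
    # A clocked dense layer multiplies every weight on every timestep,
    # whether or not the input carried any new information.
    return num_inputs * num_outputs * timesteps

def event_driven_synaptic_ops(spike_trains: list[list[int]], num_outputs: int) -> int:
    # An event-driven layer only does work when an input spikes:
    # each spike is delivered to all num_outputs downstream synapses.
    total_spikes = sum(sum(train) for train in spike_trains)
    return total_spikes * num_outputs

if __name__ == "__main__":
    random.seed(0)
    num_inputs, num_outputs, timesteps = 100, 50, 1_000
    spike_prob = 0.02  # hypothetical activity level: ~2% of inputs spike per step
    trains = [[1 if random.random() < spike_prob else 0 for _ in range(timesteps)]
              for _ in range(num_inputs)]
    dense = dense_synaptic_ops(num_inputs, num_outputs, timesteps)
    events = event_driven_synaptic_ops(trains, num_outputs)
    print(f"dense ops:        {dense:,}")
    print(f"event-driven ops: {events:,} ({events / dense:.1%} of dense)")
```

With roughly 2% activity, the event-driven count lands near 2% of the dense count; the advantage shrinks as activity rises.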

Key Innovations:

- Spiking Neural Networks (SNNs): Neurons communicate via spikes (like biological brains), activating only when needed.

- In-Memory Computing: Eliminates the “memory wall” by processing data where it’s stored.
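Spiking behaviour like this is often modelled with a leaky integrate-and-fire (LIF) neuron. Below is a minimal discrete-time sketch; the LIF model and its parameter values (`tau`, `threshold`) are assumptions for illustration, not the neuron model of any specific chip named in this article.

```python
from dataclasses import dataclass

@dataclass
class LIFNeuron:
    tau: float = 20.0       # membrane time constant, in timesteps (illustrative)
    threshold: float = 1.0  # potential at which the neuron fires
    v_reset: float = 0.0    # potential right after a spike
    v: float = 0.0          # current membrane potential

    def step(self, input_current: float) -> int:
        # Leak a fraction of the potential, then integrate the new input.
        self.v += -self.v / self.tau + input_current
        if self.v >= self.threshold:
            self.v = self.v_reset
            return 1  # spike: only now does downstream work happen
        return 0      # silent: nothing is sent

def run(neuron: LIFNeuron, currents: list[float]) -> list[int]:
    # Feed one input value per timestep and collect the output spike train.
    return [neuron.step(i) for i in currents]

if __name__ == "__main__":
    print(run(LIFNeuron(), [0.3] * 8))  # → [0, 0, 0, 1, 0, 0, 0, 1]
```

A constant input makes the neuron fire periodically, and zero input keeps it completely silent. That silence is what lets spiking hardware skip work when nothing is happening.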

Example: Intel's Loihi 3 is reported to achieve up to 10,000x better energy efficiency than GPUs on specific pattern-recognition tasks.

2. Why Neuromorphic Chips Outperform Traditional AI Hardware

A. Unmatched Energy Efficiency

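- A single neuromorphic chip (e.g., BrainChip Akida) runs on milliwatts, enough (with duty cycling) for years of battery life in IoT sensors.

- Comparison: Training GPT-4 is estimated to have required ~50 GWh (roughly the annual electricity use of 5,000 homes); advocates argue neuromorphic approaches could use under 1% of that, although no neuromorphic system has yet trained a model at this scale.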
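The arithmetic behind these comparisons is easy to check. The sketch below uses the ~50 GWh training estimate quoted above and assumes about 10 MWh of electricity per home per year (the figure implied by 50 GWh powering 5,000 homes). The 2,000 mAh, 3 V sensor battery is a hypothetical example, and the battery model is idealised.

```python
HOURS_PER_YEAR = 24 * 365

def homes_powered_for_a_year(energy_gwh: float, home_kwh_per_year: float) -> float:
    # 1 GWh = 1,000,000 kWh
    return energy_gwh * 1_000_000 / home_kwh_per_year

def battery_life_years(capacity_mwh: float, avg_power_mw: float) -> float:
    # Idealised: ignores self-discharge, temperature and voltage sag.
    return capacity_mwh / avg_power_mw / HOURS_PER_YEAR

if __name__ == "__main__":
    training_gwh = 50.0  # estimate quoted above for GPT-4 training
    print(f"{homes_powered_for_a_year(training_gwh, 10_000):,.0f} homes")  # → 5,000 homes
    print(f"1% budget: {training_gwh * 0.01:.1f} GWh")  # → 1% budget: 0.5 GWh
    cell_mwh = 2_000 * 3.0  # hypothetical 2,000 mAh cell at 3 V = 6,000 mWh
    print(f"{battery_life_years(cell_mwh, 1.0):.2f} years at 1 mW")    # → 0.68 years at 1 mW
    print(f"{battery_life_years(cell_mwh, 0.2):.2f} years at 0.2 mW")  # → 3.42 years at 0.2 mW
```

A chip drawing 1 mW continuously would drain this cell in under a year. Multi-year lifetimes depend on duty cycling, which keeps the average draw well below the active power.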

B. Real-Time Processing

- Latency: Responds in microseconds vs. milliseconds for GPUs (critical for medical diagnostics).

- Use Case: Prophesee’s event-based cameras detect motion 100x faster than conventional systems.
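Event-based cameras report per-pixel brightness changes instead of full frames. The sketch below approximates that idea by diffing two frames in log-brightness and emitting `(t, x, y, polarity)` events only where the change exceeds a threshold. Real event sensors work asynchronously per pixel rather than by comparing frames, and the `frames_to_events` helper and its 0.2 threshold are hypothetical.

```python
import math

Event = tuple[int, int, int, int]  # (timestamp, x, y, polarity)

def frames_to_events(prev: list[list[float]], curr: list[list[float]],
                     t: int, threshold: float = 0.2) -> list[Event]:
    eps = 1e-6  # avoids log(0) for fully dark pixels
    events: list[Event] = []
    for y, (row_prev, row_curr) in enumerate(zip(prev, curr)):
        for x, (p, c) in enumerate(zip(row_prev, row_curr)):
            delta = math.log(c + eps) - math.log(p + eps)
            if abs(delta) >= threshold:
                # +1 for brighter, -1 for darker; unchanged pixels stay silent
                events.append((t, x, y, 1 if delta > 0 else -1))
    return events

if __name__ == "__main__":
    static = [[10.0, 10.0], [10.0, 10.0]]
    moved = [[10.0, 20.0], [10.0, 10.0]]
    print(frames_to_events(static, static, t=0))  # → []
    print(frames_to_events(static, moved, t=1))   # → [(1, 1, 0, 1)]
```

A static scene produces no data at all, so downstream processing only reacts to motion.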

C. Edge AI Capabilities

- Processes data locally—no cloud dependency for privacy-sensitive apps (e.g., facial recognition).

- Stat: 78% of enterprises now prioritize edge AI with neuromorphic hardware (McKinsey 2025).

3. Top 5 Neuromorphic Chips to Watch in 2025

- Intel Loihi 3 – 10M neurons, ideal for robotics and sensory processing.

- IBM NorthPole – 256 cores with memory interleaved alongside compute, excels in image/video analysis.

- BrainChip Akida 2 – Enables on-chip learning for consumer devices.

- SynSense Speck – Ultra-low-power vision for AR/VR.

- Qualcomm Zeroth – Optimized for mobile and IoT edge AI.

(For developer kits, see Intel’s Neuromorphic Research Community.)

4. Industry Applications Revolutionized in 2025

- Healthcare: Real-time EEG analysis for epilepsy prediction (Mayo Clinic trials show 95% accuracy).

- Automotive: Mercedes uses neuromorphic chips for collision avoidance at 0.1ms latency.

- Agriculture: Soil sensors with 10-year battery life predict crop diseases.

5. Challenges and Future Outlook

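- Software Gap: SNN programming requires new tools (e.g., Intel's Nx SDK and its open-source successor, Lava).

- Scalability: A single current chip models only about 0.001% to 0.01% of the roughly 86 billion neurons in a human brain.

- Hybrid Future: Hybrid neuromorphic+quantum systems could appear by 2030 for complex problem-solving, though this remains speculative.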
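To put the scalability gap in numbers, this sketch compares per-chip neuron counts with the commonly cited estimate of ~86 billion neurons in a human brain. It uses the 10-million-neuron figure from the Loihi 3 entry above, plus a hypothetical 1-million-neuron chip. The chip count it reports is a naive lower bound that ignores interconnect, synapse limits, and power.

```python
import math

HUMAN_BRAIN_NEURONS = 86e9  # commonly cited estimate: ~86 billion neurons

def brain_fraction_percent(chip_neurons: float) -> float:
    # Share of a human brain's neuron count that one chip can model, in percent.
    return chip_neurons / HUMAN_BRAIN_NEURONS * 100

def chips_needed(chip_neurons: float) -> int:
    # Naive count: ignores interconnect, synapse limits and power.
    return math.ceil(HUMAN_BRAIN_NEURONS / chip_neurons)

if __name__ == "__main__":
    for neurons in (1e6, 10e6):
        print(f"{neurons:,.0f} neurons: {brain_fraction_percent(neurons):.4f}% "
              f"(~{chips_needed(neurons):,} chips)")
```

Even at 10 million neurons per chip, matching a human brain's neuron count would take thousands of chips before synapse counts are even considered.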

Conclusion: The Next Decade of AI Hardware

Neuromorphic computing isn't just an evolutionary step; it's a fundamental breakthrough. As industries from healthcare to defense adopt these chips, they'll enable AI that's faster, greener, and more autonomous than ever imagined.

Ready to experiment? Start with Intel’s Loihi developer kit or explore our guide to Edge AI trends.

Hi, I'm Abdul Rehman Khan, founder of Dev Tech Insights & Dark Tech Insights. I specialize in turning ideas into fast, scalable, and modern web solutions. From startups to enterprises, I've helped teams launch products that grow.
