LangChain vs. LlamaIndex (2026): Which AI Framework Should You Choose?


The LLM ecosystem exploded in 2023–2024, and by 2026 it has matured into a practical toolbox for building real AI apps. If you’re building retrieval-augmented systems, chatbots, or agentic apps, two framework names come up again and again: LangChain and LlamaIndex (formerly GPT Index). Both are battle-tested, popular, and actively developed, but they solve subtly different problems.

This guide helps you choose the right tool for your project by comparing architecture, core features, performance trade-offs, integrations, community adoption, and real-world use cases. I’ll also summarize where teams combine both frameworks for the best of both worlds.


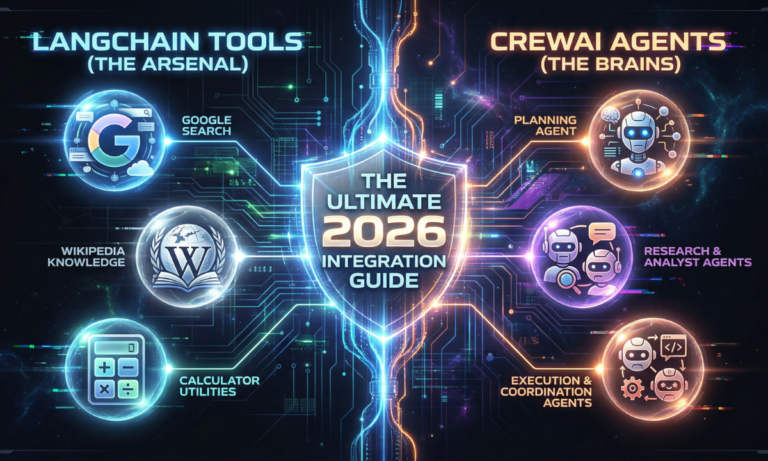

Key takeaway up front: LangChain is an orchestration and agent framework (workflows, tools, agents, memory). LlamaIndex is a data-first indexing and retrieval framework (fast RAG, vector indices, flexible connectors). Which you pick depends on whether your app centers on complex multi-step workflows and agents or fast, scalable document retrieval and knowledge indexing.

Quick overview

- LangChain — a developer toolkit for building LLM-powered apps. It provides chains, tools, agents, memory, integrations with LLMs, and an ecosystem for shipping agentic and orchestration workflows. LangChain has grown into an ecosystem with paid products and an open-source core.

- LlamaIndex — a data framework focused on turning documents and knowledge sources into queryable indices for LLMs. It specializes in ingestion, indexing (vectors, hierarchical indices), and efficient retrieval for RAG (retrieval-augmented generation) workflows.

Why this distinction matters (short version)

RAG systems have two main parts: (1) where you store and index knowledge, and (2) what the model does with that knowledge. LlamaIndex is explicitly crafted for (1): efficient indexing, vector stores, and retrieval. LangChain specializes in (2): chaining LLM calls, connecting tools and APIs, composing agent behaviors, and orchestrating multi-step logic. If you need both, they’re frequently used together.

Feature breakdown

1) Core purpose

- LangChain: Orchestration, agents, workflows, memory abstractions. It’s ideal when your app needs to call tools, perform multi-step reasoning, maintain session memory, or combine many LLM calls into a single user flow. Think “agentic” systems and pipelines.

- LlamaIndex: Data ingestion, indexing, vector stores, and retrieval. It’s purpose-built for converting documents (PDFs, HTML, DBs) into indices that LLMs can query quickly and reliably.

2) Data & retrieval

- LangChain: Has connectors to vector stores (Chroma, Pinecone, FAISS, Milvus). It supports basic indexing workflows, but retrieval logic is usually built by wiring components together. Good for custom retrieval embedded inside larger workflows.

- LlamaIndex: Comes with rich indexing structures (VectorStoreIndex, TreeIndex, SummaryIndex, formerly ListIndex, etc.) and first-class support for building and querying indices; it is optimized for RAG performance and for developer ergonomics in document-driven agents.
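To make “vector index plus top-k retrieval” concrete, here is a minimal, framework-free sketch of what a VectorStoreIndex-style retriever does conceptually. It is not LlamaIndex’s API: `ToyVectorIndex` and `embed` are hypothetical names, and the “embedding” is a bag-of-words count vector standing in for a real embedding model.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Hypothetical stand-in for an embedding model: lowercase word counts.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse count vectors.
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class ToyVectorIndex:
    """Stores (doc_id, text, vector) rows and returns the top-k matches."""

    def __init__(self) -> None:
        self._rows: list[tuple[str, str, Counter]] = []

    def add(self, doc_id: str, text: str) -> None:
        # "Ingestion": embed once at write time, not at query time.
        self._rows.append((doc_id, text, embed(text)))

    def query(self, question: str, top_k: int = 2) -> list[tuple[str, float]]:
        q = embed(question)
        scored = [(doc_id, cosine(q, vec)) for doc_id, _, vec in self._rows]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:top_k]


index = ToyVectorIndex()
index.add("refunds", "Refunds are issued within 14 days of a return request.")
index.add("shipping", "Standard shipping takes 3 to 5 business days.")
index.add("warranty", "The warranty covers manufacturing defects for one year.")
print(index.query("How many days until refunds are issued?", top_k=1))
```

Real indices swap the count vectors for learned embeddings and the linear scan for an approximate nearest-neighbor search, but the add/query shape is the same.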

3) Agents, tools, and workflow

- LangChain: Ships with agent frameworks, tool integrations (search, browsing, code-executors, APIs), memory layers, and LangGraph / LangSmith tooling for productionizing agents. If your app needs to call external APIs or perform multi-turn planning, LangChain is the straightforward choice.

- LlamaIndex: Focuses on creating a crisp interface between documents and LLMs; agentic behavior can be composed on top of LlamaIndex results, but agent orchestration itself is not the core offering. LlamaIndex often plugs into a separate orchestration layer (including LangChain).
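At its core, an agent is a loop: the model picks a tool, the runtime executes it, and the observation is fed back until the model produces an answer. The sketch below shows that loop without any framework; it is not LangChain’s agent API. `scripted_planner` is a hypothetical stand-in for an LLM that returns either a tool call or a final answer, and the tools are toy functions.

```python
from typing import Callable


# Toy tools: each takes a string argument and returns a string observation.
def calculator(expression: str) -> str:
    # Only supports "a+b" or "a*b" on integers, to avoid eval().
    if "+" in expression:
        a, b = expression.split("+")
        return str(int(a) + int(b))
    a, b = expression.split("*")
    return str(int(a) * int(b))


def lookup(term: str) -> str:
    facts = {"team_size": "12"}
    return facts.get(term, "unknown")


TOOLS: dict[str, Callable[[str], str]] = {"calculator": calculator, "lookup": lookup}


def scripted_planner(question: str, history: list[tuple[str, str, str]]) -> dict:
    # Hypothetical stand-in for an LLM call. A real agent would prompt a model
    # with the question, tool descriptions, and history, then parse its reply.
    if not history:
        return {"tool": "lookup", "input": "team_size"}
    if len(history) == 1:
        return {"tool": "calculator", "input": f"{history[-1][2]}*2"}
    return {"final": f"Doubling the team gives {history[-1][2]} people."}


def run_agent(question: str, max_steps: int = 5) -> str:
    history: list[tuple[str, str, str]] = []  # (tool, input, observation)
    for _ in range(max_steps):
        decision = scripted_planner(question, history)
        if "final" in decision:
            return decision["final"]
        observation = TOOLS[decision["tool"]](decision["input"])
        history.append((decision["tool"], decision["input"], observation))
    return "Stopped: step limit reached."


print(run_agent("How many people if we double the team?"))
```

The `max_steps` guard matters in practice: a real model can loop on tool calls, so production agents cap iterations.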

4) Integrations and vector stores

- LangChain: Broad ecosystem of connectors and support for many vector stores, model providers, and tool plugins. It’s designed as a hub for integrating LLMs and tooling.

- LlamaIndex: Also supports multiple vector stores and has optimized connectors for storage backends; it emphasizes indexing strategies and retrieval tuning (e.g., chunking, summarization).
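Chunking is one of the retrieval-tuning knobs mentioned above. LlamaIndex ships its own node parsers for this; the sketch below does not use them. It is a hypothetical `chunk_words` helper showing fixed-size windows with overlap, measured in words rather than tokens for simplicity.

```python
def chunk_words(text: str, chunk_size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into windows of `chunk_size` words that share `overlap` words."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reaches the end of the text
    return chunks


sample = " ".join(f"w{i}" for i in range(12))
for chunk in chunk_words(sample, chunk_size=5, overlap=2):
    print(chunk)
```

Larger overlap lowers the chance that an answer is split across two chunks, at the cost of storing and searching more vectors.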

5) Community, docs, and company support

- LangChain: Big community, heavy adoption, company offering (LangChain products) and active case studies. Large repo and many community projects.

- LlamaIndex: Rapidly growing, strong for RAG-focused projects, active docs and case studies showing enterprise adoption.

Real-world use cases where each shines

Choose LangChain when:

- You need agentic behavior — a system that plans and uses tools (search, calculators, APIs). Examples: research assistants that browse or execute code, multi-step task automation, and systems that require orchestration between LLMs and third-party services. LangChain’s agent and tool primitives make these apps straightforward to implement.

Choose LlamaIndex when:

- Your core problem is document understanding and retrieval — large knowledge bases, legal documents, support corpora, or any dataset that needs efficient vector indexing and relevance tuning. LlamaIndex’s index structures and retrieval options make it fast to prototype strong RAG systems.

Combine them when:

- You want the best of both: LlamaIndex for ingestion & retrieval, and LangChain for orchestration & agents. This combo is common: LlamaIndex returns the most relevant docs; LangChain organizes the multi-step flow (ask follow-up questions, call tools, summarize, write outputs). Many production teams use both in a pipeline.

Performance & benchmarks (what the community is seeing)

Benchmarks differ by workload and configuration, but a common pattern emerges in community reports and recent write-ups:

- LlamaIndex tends to be leaner and faster for document retrieval and indexing out of the box due to its focused indexing structures and optimizations. Several hands-on comparisons show faster retrieval speeds and lower overhead for RAG tasks.

- LangChain offers broader functionality, which can mean more flexibility but also more moving parts; retrieval performance depends heavily on the vector store and indexing approach you choose. If you implement efficient indexing (or pair LangChain with LlamaIndex), LangChain-based systems can be equally performant.

Bottom line: For pure RAG performance, LlamaIndex often wins on simplicity and raw speed; for complex agentic workflows or multi-tool orchestration, LangChain gives you the primitives to build robust systems. Where performance matters, test both with your data and retrieval backends — benchmarks are highly workload-dependent.
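“Test both with your data” can start as a small timing harness. This is a hedged sketch: `retriever_a` and `retriever_b` are hypothetical placeholders for whatever LangChain retriever or LlamaIndex query engine you are comparing, and wall-clock percentiles from `time.perf_counter` are only a first approximation (no warm-up control, no network isolation).

```python
import statistics
import time
from typing import Callable


def benchmark(retrieve: Callable[[str], list], queries: list[str], repeats: int = 3) -> dict:
    """Time each query `repeats` times and report latency stats in milliseconds."""
    samples_ms = []
    for _ in range(repeats):
        for q in queries:
            start = time.perf_counter()
            retrieve(q)
            samples_ms.append((time.perf_counter() - start) * 1000)
    samples_ms.sort()
    p95_index = min(len(samples_ms) - 1, int(0.95 * len(samples_ms)))
    return {
        "runs": len(samples_ms),
        "median_ms": statistics.median(samples_ms),
        "p95_ms": samples_ms[p95_index],
    }


# Hypothetical retrievers; swap in real LangChain / LlamaIndex calls here.
corpus = [f"document {i} about topic {i % 7}" for i in range(2000)]


def retriever_a(query: str) -> list:
    # Linear scan over every document.
    return [d for d in corpus if query in d][:5]


topic_map: dict[str, list[str]] = {}
for doc in corpus:
    topic_map.setdefault(doc.rsplit(" ", 1)[-1], []).append(doc)


def retriever_b(query: str) -> list:
    # Precomputed lookup keyed by topic number.
    return topic_map.get(query.rsplit(" ", 1)[-1], [])[:5]


queries = [f"topic {i}" for i in range(7)]
for name, fn in [("A", retriever_a), ("B", retriever_b)]:
    print(name, benchmark(fn, queries))
```

Compare result quality alongside latency (here both retrievers return the same documents); a faster retriever that returns worse context is not a win.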

Adoption & case studies (who uses what)

Both frameworks document customer stories and case studies — useful signals for enterprise adoption.

- LangChain publishes customer stories showing production use across industries like healthcare, telecom, and media (e.g., City of Hope, Bertelsmann), and its ecosystem includes LangSmith and LangGraph for operational tooling. Those case studies demonstrate agentic, multi-system workflows in production.

- LlamaIndex shows case studies where businesses built knowledge assistants and RAG pipelines — for example, GymNation and other enterprises using LlamaIndex to enhance domain-specific search and agent capabilities.

These customer stories show both libraries are used in production, but often for different primary reasons: LangChain for agents and orchestration, LlamaIndex for document-centric knowledge systems. Use case alignment matters more than headline adoption numbers.

Developer ergonomics: DX, APIs, and learning curve

- LangChain: Rich API surface — chains, agents, memory, tool interfaces. Powerful but more concepts to learn. If you need complex behavior, the upfront learning pays off. LangChain’s docs and growing community tutorials help flatten the curve.

- LlamaIndex: Focused API for ingestion and index querying. Easier to pick up if your primary job is RAG or building knowledge graphs. Good docs for indexing patterns, vector stores, and retrieval tuning.

If you’re new to LLM apps and only need RAG, LlamaIndex is often faster to prototype. If your app’s complexity grows (agents, tools, multi-step reasoning), you’ll appreciate LangChain’s abstractions. Many teams start with LlamaIndex for quick RAG prototypes and layer in LangChain when they need agentic behaviors.

Cost considerations & vendor lock-in

- Both libraries are open-source and support multiple backends for models and vector stores — you can avoid vendor lock-in by choosing open vector stores (FAISS, Milvus) and self-hosted LLMs. However, the economics change if you use managed vector stores (Pinecone, Weaviate) or hosted model APIs.

- LangChain’s commercial product suite (LangSmith and managed LangGraph deployment; the core LangGraph library itself is open source) provides observability and production tooling, which is useful for teams that want a managed path but may increase vendor coupling if adopted end-to-end. LlamaIndex also provides enterprise features and integrations (e.g., cloud database connectors). Consider your long-term export and migration plan when selecting managed add-ons.
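One way to keep a migration path open is to have application code depend on a narrow interface you own, with thin adapters for each backend. The sketch below uses `typing.Protocol`; `VectorStore`, `InMemoryStore`, and `answer_context` are hypothetical names, and a real adapter would wrap the FAISS, Milvus, Pinecone, or framework client you actually use.

```python
from dataclasses import dataclass, field
from typing import Protocol


class VectorStore(Protocol):
    # The only operations application code is allowed to depend on.
    def upsert(self, doc_id: str, vector: list[float], text: str) -> None: ...
    def search(self, vector: list[float], top_k: int) -> list[str]: ...


@dataclass
class InMemoryStore:
    """Reference adapter; a Pinecone or Milvus adapter would expose the same methods."""
    rows: dict[str, tuple[list[float], str]] = field(default_factory=dict)

    def upsert(self, doc_id: str, vector: list[float], text: str) -> None:
        self.rows[doc_id] = (vector, text)

    def search(self, vector: list[float], top_k: int) -> list[str]:
        # Rank by dot product; real backends use their own ANN indexes.
        def score(item: tuple[list[float], str]) -> float:
            return sum(a * b for a, b in zip(item[0], vector))
        ranked = sorted(self.rows.values(), key=score, reverse=True)
        return [text for _, text in ranked[:top_k]]


def answer_context(store: VectorStore, query_vector: list[float]) -> str:
    # Application code sees only the Protocol, so swapping backends stays local.
    return "\n".join(store.search(query_vector, top_k=2))


store = InMemoryStore()
store.upsert("a", [1.0, 0.0], "Doc about billing")
store.upsert("b", [0.0, 1.0], "Doc about onboarding")
store.upsert("c", [0.7, 0.7], "Doc about billing and onboarding")
print(answer_context(store, [1.0, 0.1]))
```

Keeping embeddings and raw text exportable from whichever backend you choose is what makes this kind of swap practical later.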

Community perspective: what devs actually say

I aggregated community sentiment from threads, blog posts, and community hubs to avoid cherry-picking:


- Reddit / r/LangChain and r/MLDev threads show enthusiastic adoption for agents and tool-driven workflows; developers praise LangChain for reducing boilerplate around agents. They also discuss complexity and the need for robust testing. Example community posts highlight small wins (quick prototypes, GitHub repos, agent demos).

- Medium and independent blog posts frequently benchmark LangChain vs LlamaIndex; authors often conclude LlamaIndex is leaner for retrieval while LangChain is better for orchestration. Practical guides show how to combine both. Recent Medium write-ups provide hands-on benchmarks and trade-off analyses.

- Dev.to / Hashnode posts emphasize DX: beginners like LlamaIndex for RAG prototypes; more advanced teams use LangChain for production agents and then connect optimized indices from LlamaIndex or vector DBs. Community posts are full of “how I built X” case studies that repeatedly use both frameworks together.

Takeaway: community consensus is pragmatic — neither library is strictly “better”; they excel at different layers, and many production stacks use them together.

Practical recipes: how to architect with either (or both)

Below are practical blueprints you can copy/paste into your architecture planning.

Recipe A — Fast RAG prototype (LlamaIndex-first)

- Ingest documents (PDFs, DOCX, webpages) into LlamaIndex.

- Build a VectorStoreIndex (or a TreeIndex for hierarchical docs).

- Use a lightweight LLM (open-source or API) to perform retrieval + answer generation via LlamaIndex’s query interface.

- Add a simple web UI for question/answering.

Why: Minimal plumbing; fast feedback loop and excellent retrieval performance.
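The “retrieval + answer generation” step in Recipe A boils down to packing the retrieved chunks into the prompt. LlamaIndex’s query engine handles this for you; the sketch below only illustrates the idea. `build_rag_prompt`, its instruction text, and the character budget are hypothetical choices, and the resulting prompt would go to whatever model you use.

```python
def build_rag_prompt(question: str, chunks: list[str], max_context_chars: int = 500) -> str:
    """Pack retrieved chunks (best first) into a prompt without exceeding a budget."""
    selected: list[str] = []
    used = 0
    for i, chunk in enumerate(chunks, start=1):
        entry = f"[{i}] {chunk}"
        cost = len(entry) + 1  # +1 for the newline separator
        if used + cost > max_context_chars:
            break  # lower-ranked chunks are dropped first
        selected.append(entry)
        used += cost
    context = "\n".join(selected)
    return (
        "Answer using only the context below. Cite sources like [1].\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )


chunks = [
    "Refunds are issued within 14 days of a return request.",
    "Standard shipping takes 3 to 5 business days.",
]
prompt = build_rag_prompt("How long do refunds take?", chunks, max_context_chars=80)
print(prompt)
```

Numbering the chunks lets the model cite sources, which makes answers easier to check against the retrieved documents.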

Recipe B — Agentic assistant (LangChain-first)

- Use LangChain agents to define tasks (search web, run SQL, call APIs).

- Add memory and tool connectors (search, calculator, browser).

- For document lookup, either embed a vector store directly or call LlamaIndex as a retrieval microservice.

- Add monitoring/observability via LangSmith or custom telemetry.

Why: Orchestration-first; ideal for workflows that require decision-making and external tool usage.
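“Add memory” in Recipe B usually starts with a bounded conversation buffer, so the prompt does not grow without limit. LangChain has its own memory abstractions; this is not that API, only a hypothetical `WindowMemory` class showing the sliding-window idea.

```python
from collections import deque


class WindowMemory:
    """Keeps only the last `max_turns` user/assistant exchanges."""

    def __init__(self, max_turns: int = 3) -> None:
        # A deque with maxlen silently drops the oldest turn when full.
        self.turns: deque[tuple[str, str]] = deque(maxlen=max_turns)

    def save(self, user: str, assistant: str) -> None:
        self.turns.append((user, assistant))

    def as_prompt(self) -> str:
        # Render the retained turns as transcript lines for the next LLM call.
        lines = []
        for user, assistant in self.turns:
            lines.append(f"User: {user}")
            lines.append(f"Assistant: {assistant}")
        return "\n".join(lines)


memory = WindowMemory(max_turns=2)
memory.save("Find Q3 revenue", "Q3 revenue was $1.2M.")
memory.save("And Q4?", "Q4 revenue was $1.5M.")
memory.save("Compare them", "Q4 grew 25% over Q3.")
print(memory.as_prompt())
```

A window is the simplest policy; summarizing older turns or storing them in a vector index are common next steps when long-range context matters.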

Recipe C — Hybrid (production-grade)

- Ingest & index all documents with LlamaIndex (optimized indices).

- Expose a retrieval API (microservice) for top-k document results with embeddings & metadata.

- Use LangChain to orchestrate multi-step flows: call the retrieval API, run agents that fetch additional data, perform transformations, and produce final outputs.

- Use LangChain or third-party telemetry for traces and error handling.

Why: Scales well, separates concerns, and leverages each framework’s strengths.
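The boundary in Recipe C is the retrieval API contract. Here is a hedged sketch of one possible JSON shape for “top-k results with metadata” and an orchestration step that consumes it. The field names, `RetrievedDoc`, and `fake_retrieval_service` are assumptions for illustration; in practice the service would wrap a LlamaIndex retriever, and the caller would be a LangChain chain or tool talking to it over HTTP rather than through a function call.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class RetrievedDoc:
    doc_id: str
    text: str
    score: float
    source: str  # metadata such as the original file or URL


def fake_retrieval_service(request_json: str) -> str:
    # Hypothetical service: parses {"query", "top_k"} and returns JSON results.
    request = json.loads(request_json)
    docs = [
        RetrievedDoc("kb-17", "Reset passwords from the account page.", 0.91, "help/account.md"),
        RetrievedDoc("kb-42", "Admins can force a password reset.", 0.84, "help/admin.md"),
        RetrievedDoc("kb-03", "Billing runs on the 1st of each month.", 0.12, "help/billing.md"),
    ]
    top = docs[: request["top_k"]]
    return json.dumps({"query": request["query"], "results": [asdict(d) for d in top]})


def orchestrate(question: str, min_score: float = 0.5) -> dict:
    # Step 1: call the retrieval API.
    raw = fake_retrieval_service(json.dumps({"query": question, "top_k": 3}))
    results = [RetrievedDoc(**r) for r in json.loads(raw)["results"]]
    # Step 2: filter weak matches before they reach the model.
    relevant = [d for d in results if d.score >= min_score]
    # Step 3: hand context plus provenance to the next step (LLM call, agent, etc.).
    return {
        "context": " ".join(d.text for d in relevant),
        "sources": [d.source for d in relevant],
    }


print(orchestrate("How do I reset my password?"))
```

Carrying `source` through the contract is what lets the final answer cite where its information came from.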

Production concerns, testing & observability

- Testing — Mock vector stores and LLM responses; write unit tests for retrieval logic and contract tests for API responses. LangChain plus LangSmith can make agent testing more manageable.

- Monitoring — Monitor latency of retrieval vs. model calls, failure rates in tool invocations, and index freshness. LangChain’s commercial tooling and existing observability stacks can help here.

- Security & Data Governance — For private corpora, configure encryption at rest for vector stores and control access to retrieval APIs. Consider schema for metadata to track data consent and provenance. LlamaIndex supports enterprise connectors (e.g., AlloyDB) to store indices securely.
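The testing and monitoring advice above can be combined in one small sketch: inject the retriever and the LLM as plain callables so tests can swap in mocks, and record per-stage latency so retrieval time and model time show up separately. `answer`, the stage names, and the mock values are hypothetical; in a real stack, LangSmith or your tracing tool would collect these timings. The test functions run under pytest or directly.

```python
import time
from typing import Callable
from unittest import mock


def answer(question: str,
           retrieve: Callable[[str], list[str]],
           llm: Callable[[str], str],
           timings: dict[str, float]) -> str:
    """RAG step with injected dependencies and per-stage latency recording."""
    start = time.perf_counter()
    docs = retrieve(question)
    timings["retrieval_s"] = time.perf_counter() - start

    if not docs:
        return "No relevant documents found."

    start = time.perf_counter()
    reply = llm(f"Context: {' '.join(docs)}\nQuestion: {question}")
    timings["llm_s"] = time.perf_counter() - start
    return reply


def test_passes_retrieved_context_to_llm() -> None:
    retrieve = mock.Mock(return_value=["Refunds take 14 days."])
    llm = mock.Mock(return_value="14 days.")
    timings: dict[str, float] = {}
    assert answer("Refund time?", retrieve, llm, timings) == "14 days."
    assert "Refunds take 14 days." in llm.call_args.args[0]
    assert set(timings) == {"retrieval_s", "llm_s"}


def test_skips_llm_when_nothing_retrieved() -> None:
    llm = mock.Mock()
    answer("Anything?", mock.Mock(return_value=[]), llm, {})
    llm.assert_not_called()


if __name__ == "__main__":
    test_passes_retrieved_context_to_llm()
    test_skips_llm_when_nothing_retrieved()
    print("all tests passed")
```

Because no real model or vector store is involved, these tests are fast and deterministic; keep a separate, smaller suite that hits the real services.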

Which should you choose? Quick decision table

| Scenario | Pick LangChain | Pick LlamaIndex | Use Both |
|---|---|---|---|
| You need agents, tools, workflows | ✅ | ❌ | ✅ (LangChain orchestrates) |
| Pure document search / knowledge base | ❌ | ✅ | ✅ (LlamaIndex for retrieval) |
| Fast prototype, few components | ❌ | ✅ | ✅ |
| Production agents with external APIs | ✅ | ❌ | ✅ |
| Want simplest path to RAG accuracy | ❌ | ✅ | ✅ |

Final verdict & future outlook

- LangChain — the orchestration and agent powerhouse. Choose it when your value depends on automating multi-step workflows, integrating tools, or building agents.

- LlamaIndex — the indexing and retrieval specialist. Choose it when your value is in turning documents into knowledge (support portals, legal corpora, internal wiki search).

- Combine both when you need best-in-class retrieval and agentic orchestration — that’s where many production systems land. Community guides, case studies, and benchmarks show this pattern repeatedly.

The LLM ecosystem will continue evolving: expect better integration primitives, faster vector stores, cheaper embeddings, and more mature observability. In 2026 the pragmatic answer is rarely “one framework to rule them all”; it’s “choose the right tool for the layer you’re solving, and stitch responsibly.”

FAQs

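1) Is LangChain better than LlamaIndex?

Neither is strictly better. LangChain is stronger for orchestration, agents, and tool use; LlamaIndex is stronger for document ingestion, indexing, and retrieval.

2) Can I use LangChain and LlamaIndex together?

Yes. A common pattern is LlamaIndex for ingestion and retrieval, with LangChain orchestrating the multi-step flow on top.

3) Which is faster for RAG?

LlamaIndex is often leaner and faster out of the box for retrieval, but results depend heavily on your vector store, indexing approach, and data, so benchmark both.

4) Which one is easier for beginners?

For RAG-only projects, LlamaIndex is usually quicker to pick up. LangChain has more concepts to learn, which pays off once you need agents and tools.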

🚀 Let's Build Something Amazing Together

Hi, I'm Abdul Rehman Khan, founder of Dev Tech Insights & Dark Tech Insights. I specialize in turning ideas into fast, scalable, and modern web solutions. From startups to enterprises, I've helped teams launch products that grow.
