Why Nearly Half of AI-Generated Code Has Security Flaws—and How Developers Can Fix It in 2025

1. Introduction

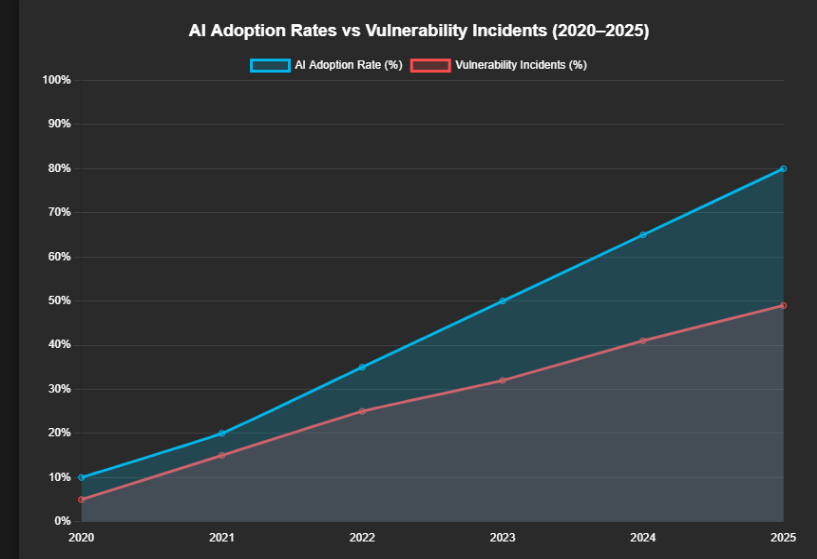

💥 Did you know that nearly 50% of AI-generated code in 2025 contains security flaws?

That’s not a fear-mongering headline — it’s a hard reality backed by multiple industry reports. As AI coding assistants like GitHub Copilot, Codeium, Tabnine, and Replit AI become everyday tools for millions of developers, the convenience they bring is matched by an invisible risk: code vulnerabilities hiding in plain sight.

2025 marks a turning point in how we write and deploy code. With more than 70% of developers now using AI-assisted tools for at least part of their workflow, we’ve entered what I call the AI-native era of development. While this revolution speeds up delivery cycles and lowers the barrier for new programmers, it also makes it far easier for flawed code to reach production systems, potentially exposing businesses to hacks, data leaks, and compliance nightmares.

From my own perspective as both a developer and tech blogger, I’ve tested dozens of these AI coding tools hands-on. The results are a mixed bag. On one hand, they’ve cut my coding time in half and boosted productivity in ways I never imagined. On the other, I’ve repeatedly caught AI-generated snippets that looked flawless but contained subtle yet dangerous security gaps — the kind that a busy dev might easily miss.

This post dives into the uncomfortable truth behind AI-generated code in 2025, showing both its light side (speed, efficiency, accessibility) and its dark side (security risks, hidden vulnerabilities, and overreliance). More importantly, I’ll share practical strategies every developer can use to make sure that speed doesn’t come at the cost of security.

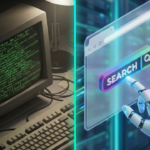

2. The Alarming Numbers Behind AI-Generated Code

The numbers don’t lie — and they’re terrifying. According to multiple security studies published in early 2025, nearly half of all AI-generated code contains at least one security vulnerability. That’s a staggering rise compared to 2023, when only around 32% of AI-assisted code showed significant flaws.

These vulnerabilities range from minor weaknesses to critical exploits, such as:

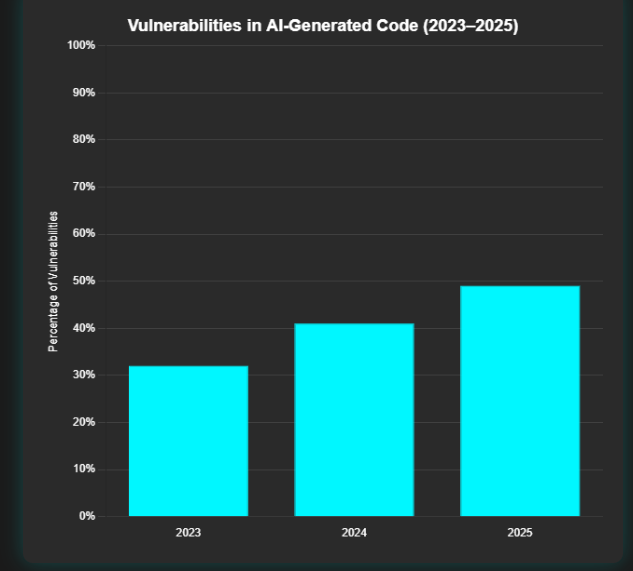

- SQL injection flaws introduced by AI-generated queries that build SQL strings directly from unsanitized user input.

- Cross-Site Scripting (XSS) vulnerabilities embedded in JavaScript snippets.

- Hardcoded credentials or API keys, sometimes fabricated by AI tools themselves.
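To make the first item concrete, here is a minimal Python sketch using the standard-library `sqlite3` module and an in-memory database with made-up users. `find_user_unsafe` shows the string-built query pattern that often turns up in generated code; `find_user_safe` shows the parameterized alternative. The function names and data are illustrative assumptions, not taken from any real incident.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create an in-memory demo database with two users (illustrative data only)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, email TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "alice@example.com"), ("bob", "bob@example.com")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # VULNERABLE: user input is pasted straight into the SQL text.
    query = f"SELECT name, email FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # SAFE: the ? placeholder makes the driver treat `name` strictly as data.
    return conn.execute(
        "SELECT name, email FROM users WHERE name = ?", (name,)
    ).fetchall()


if __name__ == "__main__":
    conn = make_db()
    payload = "x' OR '1'='1"
    print(len(find_user_unsafe(conn, payload)))  # 2: every row leaks
    print(len(find_user_safe(conn, payload)))    # 0: no user has that literal name
```

The only difference between the two functions is whether user input becomes part of the SQL text or is passed separately as a parameter, which is why "looks fine" is not a useful test for this class of bug.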

And the risks aren’t hypothetical. In late 2024, a U.S.-based fintech startup reported a massive breach traced back to an AI-generated login function. While the code appeared clean, it skipped essential input validation, allowing attackers to inject malicious payloads. Another case in Europe saw an e-commerce giant’s checkout system compromised after developers unknowingly pushed AI-generated code that mishandled session tokens.

These incidents highlight an uncomfortable reality: while AI tools accelerate development, they can also accelerate the spread of flawed, insecure code into production systems.

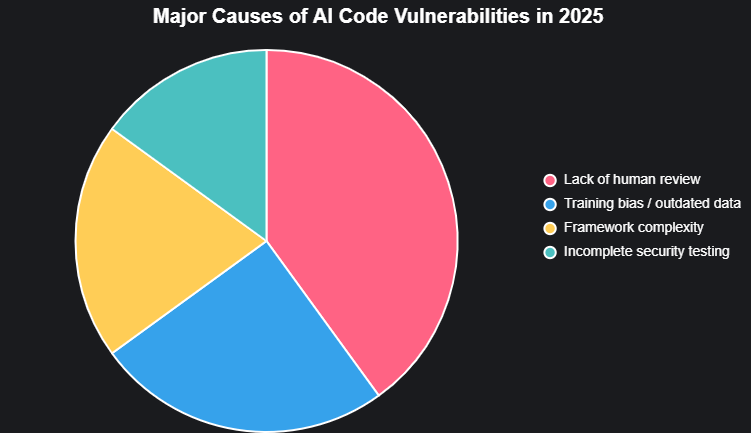

3. Why AI-Generated Code Is So Vulnerable

It’s tempting to believe that if AI-generated code runs without errors, it’s safe. Unfortunately, that assumption is dangerously wrong. Let’s break down why so much AI-generated code is riddled with vulnerabilities:

1. Lack of Context in AI Models

Tools built on Large Language Models (LLMs), like GitHub Copilot or Codeium, don’t “understand” your full project context. The underlying models generate code based on statistical patterns in their training data. That means they may produce snippets that look perfectly fine syntactically but lack crucial security considerations.

2. Biases and Outdated Training Data

Most AI coding assistants are trained on publicly available code from repositories. But here’s the catch: a lot of that code was written before modern security practices became standard. If a training set includes insecure practices, the AI can (and often does) reproduce those flaws at scale.
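Password storage is a good example of an outdated pattern that still appears in public code. The sketch below contrasts unsalted MD5 with salted PBKDF2-HMAC-SHA256 from Python's standard `hashlib`. The function names are hypothetical, and the default of 600,000 iterations reflects my understanding of OWASP's Password Storage Cheat Sheet guidance for PBKDF2-HMAC-SHA256; check the current recommendation (or use a dedicated Argon2id/bcrypt library) before adopting it.

```python
import hashlib
import hmac
import os

# Assumption: iteration count based on OWASP guidance for PBKDF2-HMAC-SHA256; re-check it.
DEFAULT_ITERATIONS = 600_000


def hash_password_legacy(password: str) -> str:
    # Outdated pattern still common in older public code: fast, unsalted MD5.
    # Identical passwords produce identical hashes, and MD5 is cheap to brute-force.
    return hashlib.md5(password.encode()).hexdigest()


def hash_password(password: str, iterations: int = DEFAULT_ITERATIONS) -> tuple[bytes, bytes]:
    # Modern baseline: a random per-user salt plus a deliberately slow key-derivation function.
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, iterations)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes,
                    iterations: int = DEFAULT_ITERATIONS) -> bool:
    # Recompute the digest with the stored salt and compare.
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, iterations)
    # Constant-time comparison avoids leaking how many leading bytes matched.
    return hmac.compare_digest(candidate, expected)
```

In use, you would store the salt, digest, and iteration count with the user record and call `verify_password` at login. If an assistant suggests something like `hash_password_legacy`, treat it as a red flag rather than a shortcut.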

3. Over-Reliance by Junior Developers

Many junior developers — or even non-developers experimenting with AI coding assistants — trust these tools blindly. Instead of verifying each snippet, they copy-paste into production, assuming “AI must know best.” This creates pipelines of insecure code, especially in startups and small businesses without dedicated security teams.

4. Code That “Looks Right” but Fails Security Audits

AI-generated code often passes basic tests and compiles correctly, which tricks developers into thinking it’s safe. But when security audits run, the code fails disastrously — missing essential checks like input sanitization or proper authentication handling.
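One of the missing checks mentioned above, input validation, can be as simple as an allowlist. This is a minimal sketch assuming a hypothetical username policy (3 to 32 letters, digits, underscores, dots, or hyphens); adapt the pattern to your own rules. Validation narrows what reaches your queries and templates, but it complements parameterized queries and output encoding rather than replacing them.

```python
import re

# Hypothetical policy for this sketch: 3-32 chars of letters, digits, '_', '.', or '-'.
USERNAME_RE = re.compile(r"[A-Za-z0-9_.-]{3,32}")


def validate_username(raw: str) -> str:
    """Return the username unchanged if it matches the allowlist, else raise ValueError."""
    # fullmatch() requires the entire string to match, not just a prefix.
    if not isinstance(raw, str) or not USERNAME_RE.fullmatch(raw):
        raise ValueError("invalid username")
    return raw
```

For example, `validate_username("alice_01")` returns `"alice_01"`, while `validate_username("x' OR '1'='1")` raises `ValueError` before the value gets anywhere near a database.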

4. The Hidden Dark Side Big Tech Won’t Tell You

While AI coding assistants promise speed and convenience, there’s a darker side that tech giants rarely highlight. Let’s peel back the glossy marketing:

Proprietary Lock-Ins: Developers Relying Blindly on Copilot

When developers build their workflows entirely around tools like GitHub Copilot or Amazon CodeWhisperer (now part of Amazon Q Developer), they often lose the ability to code independently. The more you rely, the more you get locked into a proprietary ecosystem. And here’s the kicker: once you’re in, switching costs skyrocket, not just in money but in retraining your skills and pipelines.

AI-Generated Backdoors (Intentional or Accidental)

Imagine code that looks legitimate but has a subtle backdoor slipped in by an AI assistant. Was it a hallucination? A training data artifact? Or something more sinister inserted through poisoned training sets?

Even if accidental, the result is the same: your codebase is compromised without your knowledge.

The Myth of “AI = Secure by Default”

Big Tech often markets AI coding as foolproof. But let’s be blunt: no AI model inherently understands your business logic, security compliance requirements, or threat model. Believing otherwise is like assuming autopilot will always prevent a plane crash — it doesn’t work that way.

Potential for AI Supply Chain Attacks

We’ve entered an era where an attacker doesn’t need to compromise your servers directly. They can target the AI model you trust, injecting poisoned training data or malicious prompt instructions that lead to flawed outputs. Security researchers have already demonstrated poisoning attacks against code-completion models, and most developers don’t even know the risk exists.

5. How Security Flaws Slip Through the Cracks

The scary part about AI-generated vulnerabilities isn’t that they happen — it’s how easily they go unnoticed until it’s too late.

No Human Review in Fast AI Pipelines

In the age of continuous integration and delivery (CI/CD), speed is everything. But AI tools encourage a mindset of “generate → commit → deploy” without thorough review. When human oversight is skipped, subtle flaws slip right into production.

Complexity in Modern Frameworks + AI Guesswork

Today’s frameworks (like Next.js, Angular, or Django) are complex ecosystems with hundreds of moving parts. AI often “guesses” the right code patterns. Unfortunately, guessing isn’t good enough when one wrong line can expose a database.

Incomplete Test Coverage

Even when developers run automated tests, they usually test functionality, not security flaws. For example, a login form test might confirm the user can sign in — but not whether SQL injection is possible. AI-generated code can pass all functional tests while still being deeply insecure.
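A security test does not have to be elaborate. The sketch below uses Python's built-in `unittest` and `sqlite3` with a hypothetical `users` table: one test checks that valid credentials work, the other checks that a classic injection payload (`alice' --`) cannot bypass the password check. Passwords are stored in plaintext purely to keep the demo short; a real application should store only password hashes.

```python
import sqlite3
import unittest


def make_db() -> sqlite3.Connection:
    # Demo only: a real app would store password hashes, never plaintext.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, password TEXT)")
    conn.execute("INSERT INTO users VALUES ('alice', 's3cret')")
    return conn


def login_unsafe(conn: sqlite3.Connection, name: str, password: str) -> bool:
    # String-built query: passes the functional test, fails the security test.
    q = f"SELECT 1 FROM users WHERE name = '{name}' AND password = '{password}'"
    return conn.execute(q).fetchone() is not None


def login_safe(conn: sqlite3.Connection, name: str, password: str) -> bool:
    # Parameterized query: inputs are bound as data, never parsed as SQL.
    q = "SELECT 1 FROM users WHERE name = ? AND password = ?"
    return conn.execute(q, (name, password)).fetchone() is not None


class LoginTests(unittest.TestCase):
    # Swap in login_unsafe here to watch the security test fail.
    login = staticmethod(login_safe)

    def setUp(self):
        self.conn = make_db()

    def test_valid_credentials_succeed(self):
        # Functional check: where most test suites stop.
        self.assertTrue(self.login(self.conn, "alice", "s3cret"))

    def test_sql_injection_is_rejected(self):
        # Security check: "--" would comment out the password clause in a string-built query.
        self.assertFalse(self.login(self.conn, "alice' --", "anything"))
```

Save it as, say, `test_login.py` and run `python -m unittest test_login`. Pointing `login` at `login_unsafe` instead leaves the functional test green while the security test fails, which is exactly the gap a functional-only suite misses.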

Developers Trusting “Green Checkmarks” in IDEs

Many IDEs now highlight AI code suggestions with reassuring indicators. The problem? A green checkmark only means the code compiles or looks syntactically correct — not that it’s secure. This false sense of safety leads developers to trust flawed code without deeper audits.

6. How Developers Can Take Back Control (The Fixes)

If nearly half of AI-generated code has flaws, does that mean we abandon AI altogether? Not at all. The key is taking back control and using AI responsibly — not blindly. Here’s how developers in 2025 can make sure AI serves them, not the other way around.

✅ Code Reviews: Mandatory Peer + AI Audit Combo

Even if AI generates code, every pull request should go through a human peer review. To strengthen this, add a secondary AI audit that specifically checks for security risks. This dual-review system balances speed with safety.

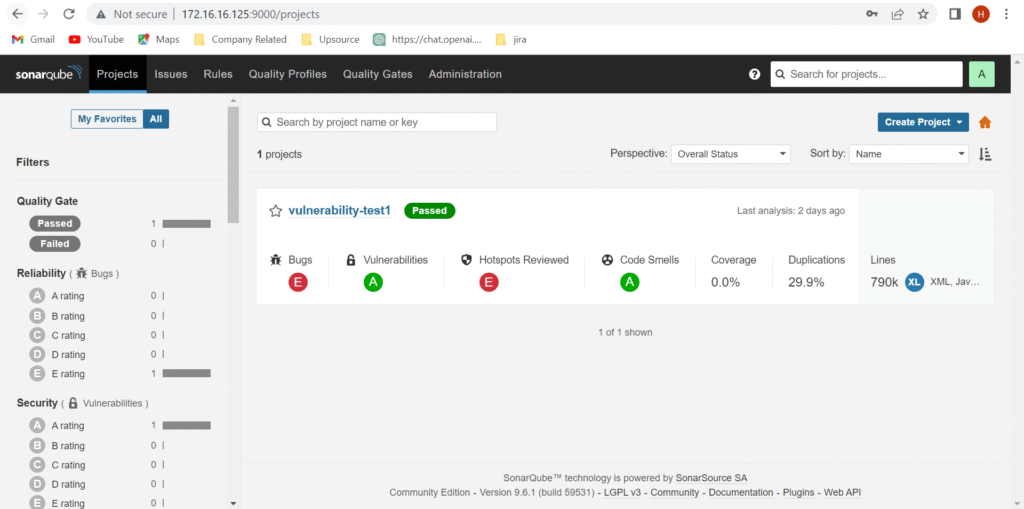

🛠 Static Analysis Tools: Your Safety Net

Don’t rely solely on your IDE’s green checkmarks. Static analysis tools like SonarQube, Semgrep, and Bandit (Python-only) can automatically scan AI-generated code for vulnerability patterns such as SQL injection and cross-site scripting (XSS), while a dependency scanner like OWASP Dependency-Check flags libraries with known vulnerabilities. These tools often catch what humans miss.
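To give a feel for what these scanners do, here is a deliberately tiny sketch of a single static-analysis rule, written with Python's standard `ast` module: it flags `.execute()`/`.executemany()` calls whose SQL argument is built with an f-string, `+`, `%`, or `.format()`. The function name is hypothetical and this is a toy for illustration only, not how SonarQube, Semgrep, or Bandit are implemented; real tools ship many more rules, track data flow, and handle cases this one misses.

```python
import ast


def find_sql_string_building(source: str) -> list[int]:
    """Return line numbers of .execute()/.executemany() calls whose first argument
    is built with an f-string, '+', '%', or str.format(): a common SQL injection smell."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Attribute)
            and node.func.attr in {"execute", "executemany"}
            and node.args
        ):
            arg = node.args[0]
            is_built = (
                isinstance(arg, ast.JoinedStr)  # f"...{x}..."
                or (isinstance(arg, ast.BinOp) and isinstance(arg.op, (ast.Add, ast.Mod)))  # "..." + x, "..." % x
                or (
                    isinstance(arg, ast.Call)
                    and isinstance(arg.func, ast.Attribute)
                    and arg.func.attr == "format"  # "...".format(x)
                )
            )
            if is_built:
                findings.append(node.lineno)
    # ast.walk does not visit nodes in source order, so sort the results.
    return sorted(findings)


SAMPLE = '''
cur.execute(f"SELECT * FROM users WHERE name = '{name}'")
cur.execute("SELECT * FROM users WHERE name = ?", (name,))
cur.execute("SELECT * FROM users WHERE id = %s" % user_id)
'''

if __name__ == "__main__":
    print(find_sql_string_building(SAMPLE))  # [2, 4]
```

Note that the parameterized call on line 3 is not flagged: its first argument is a plain string literal, and the user value travels separately.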

📜 Secure Coding Practices: Back to the Basics

AI can only be as safe as the standards it’s asked to follow. Developers must enforce:

- OWASP Top 10 guidelines

- Dependency and package vulnerability checks

- Regular patching and updating of libraries

By embedding security in the development lifecycle, even AI code gets forced through a safety-first filter.
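For the XSS risk covered by the OWASP Top 10 (the 2021 edition groups XSS under Injection), the core defence is encoding user-controlled data before it is written into a page. In real projects a template engine with autoescaping usually does this for you; the sketch below uses only the standard library's `html.escape`, and `render_comment` is a hypothetical helper. `html.escape` is suitable for HTML text and quoted attribute values, not for JavaScript, CSS, or URL contexts.

```python
import html


def render_comment(author: str, body: str) -> str:
    """Build an HTML snippet, escaping every user-controlled value before insertion."""
    # html.escape converts &, <, >, and (by default) quotes into HTML entities.
    return (
        '<div class="comment">'
        f"<strong>{html.escape(author)}</strong>"
        f"<p>{html.escape(body)}</p>"
        "</div>"
    )
```

With this helper, a comment body of `<script>alert(1)</script>` is rendered as harmless text (`&lt;script&gt;...`) instead of running in the visitor's browser.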

🧠 AI Prompt Engineering: Write for Security

The quality of AI output is tied to the quality of the prompt. Instead of asking Copilot to “write a login function,” specify:

“Write a secure login function following OWASP standards and using parameterized queries.”

This small tweak won’t guarantee secure output, but it steers the assistant toward safer patterns. You still need to review what it produces.

🔒 Self-Hosting AI Tools: Reducing the Risk

Cloud-based coding assistants raise serious questions:

- Where is your proprietary code being stored?

- Could an AI vendor inadvertently leak snippets of your code to others?

By running AI models locally on your own hardware, you keep proprietary code off third-party servers. It can be slower and needs capable hardware, but for sensitive applications, privacy beats convenience.
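As a sketch of what local use can look like, the snippet below sends a prompt to an Ollama server's `/api/generate` endpoint on `localhost` using only the standard library. It assumes Ollama is installed, running on its default port 11434, and that a code model has already been pulled; `codellama` is just an example model name, and the helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: a local Ollama server on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "codellama") -> urllib.request.Request:
    # "stream": False asks Ollama for a single JSON object instead of a token stream.
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode()
    return urllib.request.Request(
        OLLAMA_URL,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt: str, model: str = "codellama") -> str:
    # The request goes to localhost, so the prompt (and any code in it) stays on this machine.
    with urllib.request.urlopen(build_request(prompt, model), timeout=120) as resp:
        return json.loads(resp.read())["response"]
```

Calling `ask_local_model("Write a sqlite3 lookup by username using a parameterized query")` returns the model's text. Keeping the model local protects your code from third-party exposure, but the generated output still needs the same review as any other AI suggestion.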

7. The Future of AI Security in Development

Looking ahead, 2025 is not the peak — it’s the pivot point. The next two years (2025–2027) will redefine how developers think about security in an AI-driven era.

🔮 Predictions for 2025–2027

- AI Tools That Self-Check for Vulnerabilities

Expect the rise of coding assistants that not only generate code but also automatically audit their own output against OWASP and other security standards. Imagine GitHub Copilot 2.0 flagging its own insecure SQL query before you ever see it.

- Agentic AIs in Code Auditing

Agentic AIs (autonomous systems capable of making decisions without constant human input) will soon dominate security reviews. They’ll run continuous code audits in the background, alerting teams when risks emerge.

- Cybersecurity as the Top Dev Skill

Forget the old idea that cybersecurity is just for specialists. By 2027, the most in-demand developers will be those who can blend coding with active security analysis.

🌗 Light Side vs. Dark Side Summary

- The Light Side

  - Faster, more efficient development cycles

  - Self-healing code with AI auto-patching

  - Reduced dependency on manual security testing

- The Dark Side

  - Bigger attack surface due to AI-powered automation

  - Stealthier, AI-generated exploits that evade detection

  - Overconfidence in “AI = secure by default” leading to massive breaches

In short: AI is both the shield and the sword. The developers who thrive will be those who learn to wield it wisely.

8. Conclusion

In 2025, developers face a difficult truth: the speed of AI-generated code often comes at the cost of security. While tools like Copilot and Codeium have changed how we build software, they’ve also introduced vulnerabilities at a scale we’ve never seen before.

Speaking from my own experience, I’ve started auditing every single AI-generated snippet before using it in production. It’s not about mistrusting the AI — it’s about acknowledging that no model understands your project’s security requirements as well as you do. I’ve learned that a little extra time spent reviewing code now can prevent catastrophic breaches later.

If you take one thing away from this article, let it be this:

👉 Never let speed compromise security.

As developers, we’re responsible not only for writing code but also for protecting the users who rely on it.

The future of AI in coding isn’t about rejecting these tools — it’s about using them wisely and securely.

Start today. Add a security audit step to your workflow. Use static analysis tools. Train yourself and your team on secure coding practices. And when in doubt, ask not just “Does this code work?” but “Is this code safe?”

9. FAQ Section

Q1: Is AI-generated code safe to use in production?

Not by default. Studies show that nearly 50% of AI-generated code contains vulnerabilities. Always perform manual reviews and run static analysis tools before deploying.

Q2: Which AI coding assistant is most secure in 2025?

No tool is 100% secure. However, Copilot, Codeium, and Tabnine have recently integrated more robust security checks. Still, your review process matters more than the assistant itself.

Q3: How can I check if my AI-generated code has flaws?

Use tools like SonarQube, Semgrep, Bandit, and OWASP Dependency-Check. Pair these with peer reviews and penetration testing for best results.

Q4: Should I self-host AI models for better security?

Yes, if possible. Self-hosting reduces exposure to third-party risks and data leaks. Tools like Ollama or LocalAI allow for local control without sending code to external servers.

Q5: What are the top tools for auditing AI code today?

- SonarQube: comprehensive code quality and vulnerability scanning

- Semgrep: lightweight static analysis with customizable rules

- Bandit: Python-focused security scanner

- OWASP Dependency-Check: detects known vulnerable libraries

- Burp Suite (for web apps): penetration testing at runtime

🚀 Let's Build Something Amazing Together

Hi, I'm Abdul Rehman Khan, founder of Dev Tech Insights & Dark Tech Insights. I specialize in turning ideas into fast, scalable, and modern web solutions. From startups to enterprises, I've helped teams launch products that grow.

- ⚡ Frontend Development (HTML, CSS, JavaScript)

- 📱 MVP Development (from idea to launch)

- 📱 Mobile & Web Apps (React, Next.js, Node.js)

- 📊 Streamlit Dashboards & AI Tools

- 🔍 SEO & Web Performance Optimization

- 🛠️ Custom WordPress & Plugin Development