Ethical Data Collection and Privacy by Design: Dev Practices You Need to Implement

Introduction: Why Privacy-First Matters in 2025

Data has become the world’s most valuable currency, but it’s also one of the riskiest. Every click, swipe, and tap generates personal insights, and developers are on the front lines of protecting them. A single careless API call, insecure logging practice, or overzealous analytics setup can break trust and even attract lawsuits.

In 2025, privacy is not just a compliance checkbox. It’s a core product value, a competitive differentiator, and a legal necessity. As a developer, ignoring it is no longer an option.

This post explores what ethical data collection means, how to apply Privacy by Design (PbD), real-world case studies of companies getting it right and wrong, and concrete dev practices you can start applying today.

What Does “Ethical Data Collection” Really Mean?

Ethical data collection goes beyond GDPR, CCPA, or India’s DPDP Act. It’s not about what the law allows but what users would reasonably expect.

- Transparency → Tell users what you collect, why, and how long you’ll keep it.

- Consent → Require an explicit opt-in instead of assuming users agree until they opt out.

- Minimization → Collect only what you actually need.

- Security → Store, encrypt, and transmit responsibly.

- User Control → Provide deletion, export, and preference management.
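To make the User Control point concrete, here is a minimal sketch of the logic behind data export and deletion. `UserRecord` and `UserStore` are hypothetical names, and the in-memory dictionary stands in for a real database; a production version would also need to reach backups, caches, and copies held by third-party processors.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class UserRecord:
    """Hypothetical shape of what the app stores about one user."""
    user_id: str
    email: str
    preferences: dict = field(default_factory=dict)


class UserStore:
    """Hypothetical store; the dict stands in for a real database."""

    def __init__(self) -> None:
        self._users: dict[str, UserRecord] = {}

    def add(self, record: UserRecord) -> None:
        self._users[record.user_id] = record

    def export_json(self, user_id: str) -> str:
        """Return everything stored about one user as machine-readable JSON."""
        return json.dumps(asdict(self._users[user_id]), indent=2, sort_keys=True)

    def delete(self, user_id: str) -> bool:
        """Erase the user's record; returns False if nothing was stored."""
        return self._users.pop(user_id, None) is not None
```

Deriving the export from the same record type the app stores, rather than from a hand-maintained list of fields, keeps the export complete as the schema grows.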

Developer perspective: It’s tempting to log everything for “future analytics.” I’ve been guilty of this too. But in my experience, most of that data never gets used, and it still increases breach risk. Ethical collection often aligns with efficient engineering.
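One way to act on this is to pass every analytics event through an allowlist before it is stored. The sketch below assumes a hypothetical `ALLOWED_EVENT_FIELDS` set; the field names are illustrative, not a recommendation for any particular product.

```python
from typing import Any

# Hypothetical allowlist: only fields with a documented, current purpose.
ALLOWED_EVENT_FIELDS = {"event", "timestamp", "page", "app_version"}


def minimize_event(raw: dict[str, Any]) -> dict[str, Any]:
    """Keep only allowlisted fields; everything else is dropped before storage."""
    return {key: value for key, value in raw.items() if key in ALLOWED_EVENT_FIELDS}
```

An allowlist fails safe: a new field that someone adds to the client payload is dropped until a developer deliberately approves it, whereas a denylist lets it through until someone notices.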

Privacy by Design: The 7 Principles Every Dev Should Apply

Privacy by Design (PbD), first proposed by Ann Cavoukian, has evolved into a set of practical guidelines. Here’s how they map to developer practices in 2025:

- Proactive not reactive → Build security before launch, not after an incident.

- Example: GitHub’s secret scanning push protection blocks pushes that contain recognized secrets, instead of alerting only after an API key has already leaked.

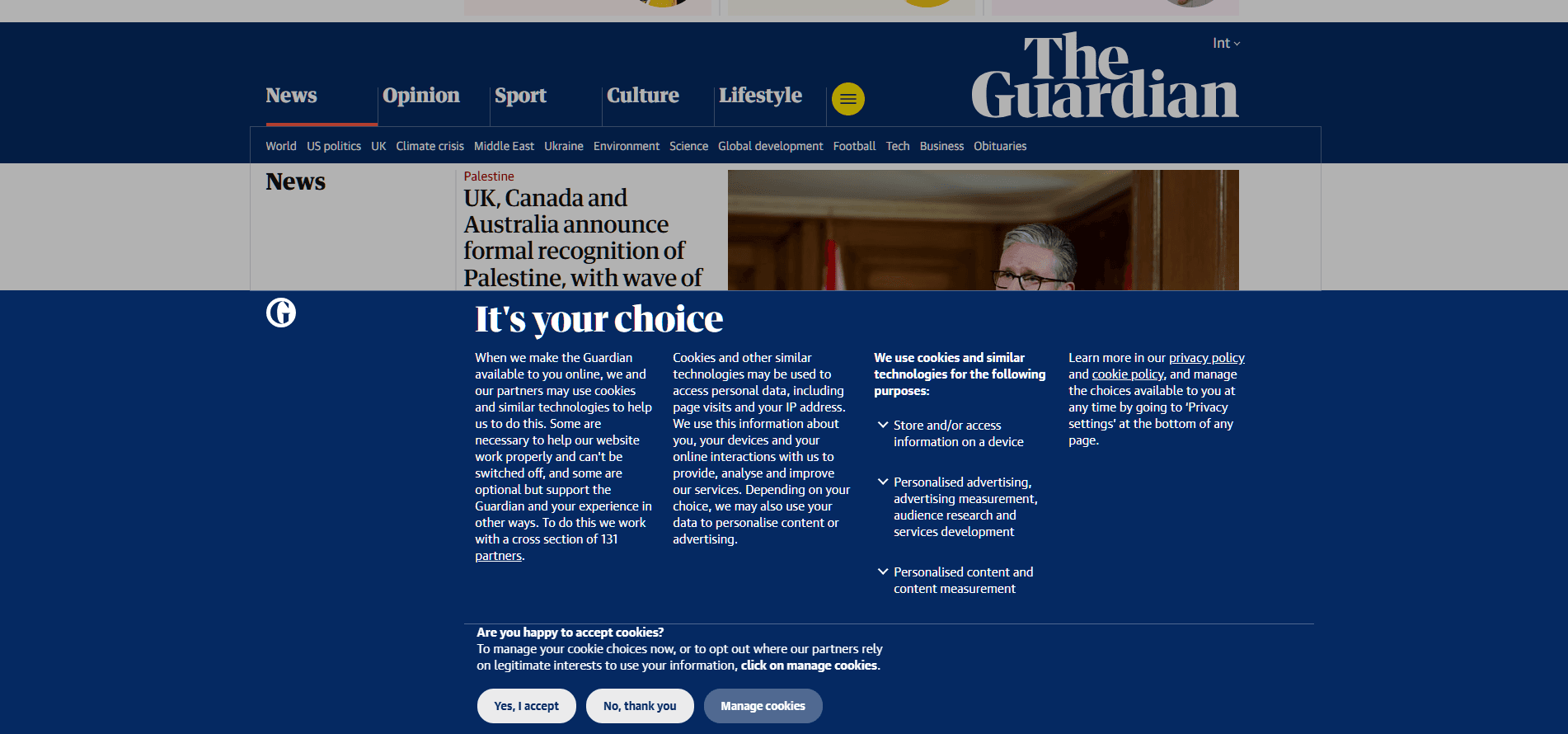

- Privacy as default → Treat every user as opted out until they explicitly give consent.

- Example: Apple’s App Tracking Transparency (ATT) made IDFA tracking opt-in, reshaping the ad industry.

- Privacy embedded into design → Bake it into architecture, not bolt it on.

- Example: Signal built end-to-end encryption into its core, not as an optional feature.

- Full functionality → Avoid false trade-offs between privacy and usability.

- Example: DuckDuckGo’s search proves you can have relevant results without invasive profiling.

- End-to-end security → Encrypt data at rest, in transit, and sometimes in use (techniques such as homomorphic encryption are maturing).

- Example: WhatsApp now offers end-to-end encrypted chat backups.

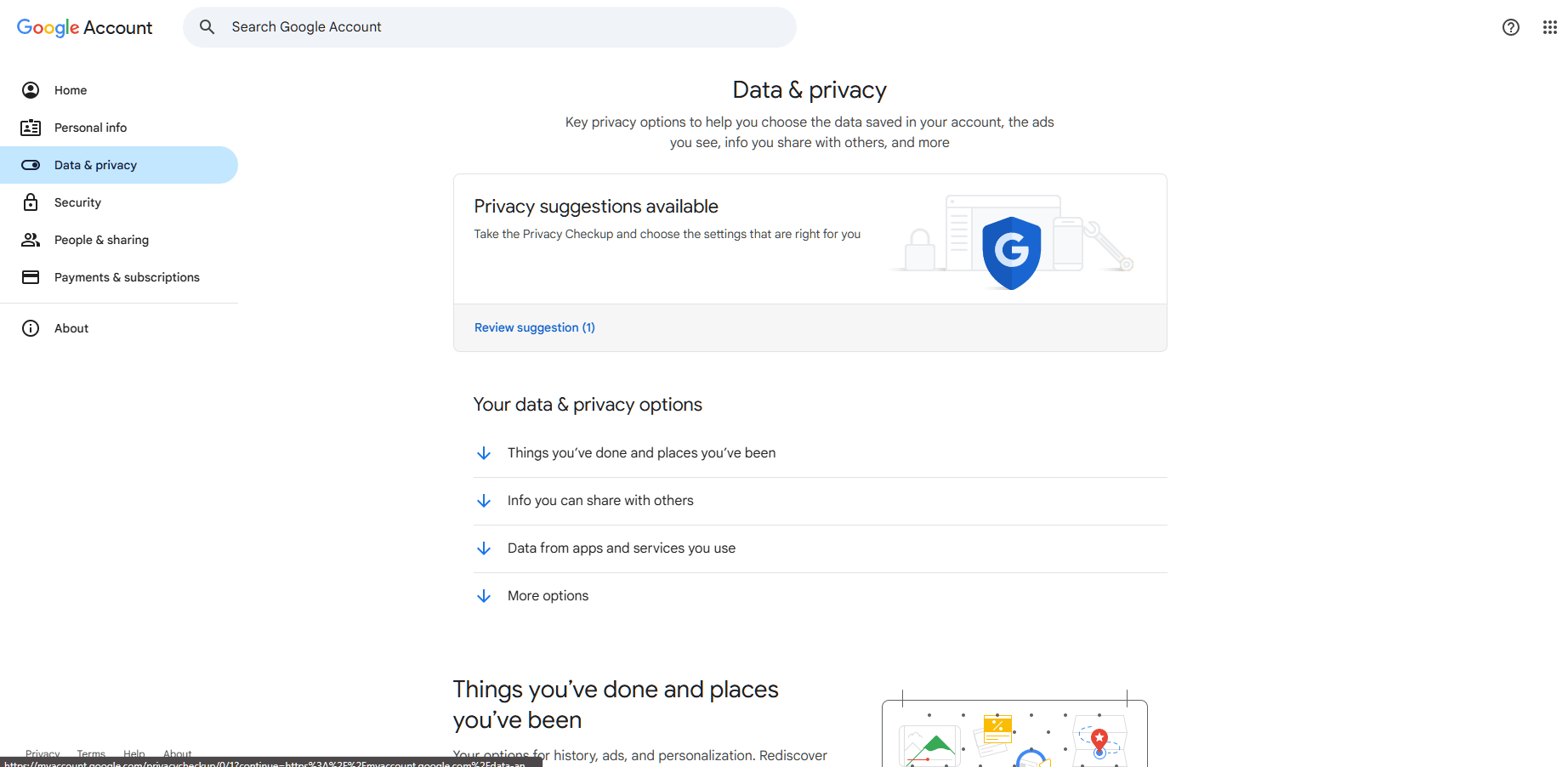

- Visibility and transparency → Provide clear privacy dashboards.

- Example: Google’s revamped My Ad Center shows what data influences ad targeting.

- Respect for user privacy → Keep controls simple, not buried in settings.

- Example: Brave browser blocks third-party trackers by default without requiring setup.

| Principle | Meaning | Example in Practice |
|---|---|---|
| Proactive not Reactive | Prevent issues before they occur | Default encryption of user data |
| Privacy as Default | Users don’t need to opt in for protection | Apps not collecting GPS unless necessary |
| End-to-End Security | Protect data throughout its lifecycle | TLS for transmission, AES for storage |
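The “TLS for transmission” row can be enforced in code instead of being left to whatever a client library happens to default to. This sketch uses Python’s standard `ssl` module to build a client context that verifies certificates and refuses protocol versions older than TLS 1.2; the function name `make_client_tls_context` is hypothetical. Encryption at rest needs a third-party library (for example, the `AESGCM` class in the `cryptography` package) and is not shown here.

```python
import ssl


def make_client_tls_context() -> ssl.SSLContext:
    """Client-side TLS settings for sending user data over the network."""
    # create_default_context() loads the system CA bundle, requires a valid
    # certificate, and checks that the hostname matches it.
    context = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    # Refuse legacy protocol versions (TLS 1.0 and 1.1).
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context
```

The resulting context can be passed to standard clients, for example `urllib.request.urlopen(url, context=make_client_tls_context())`.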

Case Studies: The Good, the Bad, and the Fined

The Bad: Meta’s €1.2 Billion Fine

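In May 2023, Ireland’s Data Protection Commission, acting on a binding decision from the European Data Protection Board, fined Meta €1.2 billion for mishandling transfers of EU users’ Facebook data to the United States. The issue wasn’t hacking; it was architectural. Meta’s data flows no longer aligned with the evolving legal framework for transatlantic transfers.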

Lesson for devs: Architecture choices have compliance implications. Don’t assume today’s design will pass tomorrow’s law.

The Good: Apple’s ATT Framework

Apple’s ATT (App Tracking Transparency) rollout forced developers to request explicit tracking permission. While controversial, it demonstrated how privacy-first architecture can reshape entire industries.

Lesson for devs: Sometimes protecting privacy can actually become a brand advantage.
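ATT’s core idea, that nothing is tracked until the user says yes, carries over to any platform. Here is a minimal Python sketch of a consent gate modeled loosely on ATT’s statuses. `TrackingConsent` and `ConsentGatedTracker` are hypothetical names, not Apple’s API (on iOS the real prompt goes through `ATTrackingManager`), and the `sent` list stands in for a network call.

```python
from enum import Enum


class TrackingConsent(Enum):
    NOT_DETERMINED = "not_determined"  # the user has not been asked yet
    DENIED = "denied"
    AUTHORIZED = "authorized"


class ConsentGatedTracker:
    """Hypothetical wrapper: no event leaves the app without explicit opt-in."""

    def __init__(self) -> None:
        self.consent = TrackingConsent.NOT_DETERMINED  # private by default
        self.sent: list[dict] = []  # stand-in for calls to an analytics backend

    def set_consent(self, granted: bool) -> None:
        """Record the user's answer to an explicit prompt."""
        self.consent = TrackingConsent.AUTHORIZED if granted else TrackingConsent.DENIED

    def track(self, event: dict) -> bool:
        """Send the event only after an explicit "yes"; otherwise drop it."""
        if self.consent is not TrackingConsent.AUTHORIZED:
            return False
        self.sent.append(event)
        return True
```

Events recorded before consent are dropped rather than queued: buffering them and sending them later would mean collecting data the user never agreed to.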

Startup Example: Figma

Figma minimized data collection early by focusing on collaborative features, not surveillance. Their growth wasn’t slowed by privacy limitations; instead, they won developer trust.

Lesson: Small teams benefit from lean data—fewer systems to secure, less risk if breached.

Developers’ Voices: What the Community Thinks

I pulled insights from Reddit (r/privacy, r/webdev), Hacker News, and Dev.to threads from late 2024–2025. Here’s the pulse:

- “Default to not collecting. If you think you need it, prove it to yourself first.” (Reddit)

- “Consent banners became dark patterns. True ethics means making ‘No’ as easy as ‘Yes’.” (Hacker News)

- “As a dev, my manager wanted all analytics. I pushed back—ended up saving infra costs by half.” (Dev.to)

- “Privacy-first design doesn’t slow velocity. It forces you to think about what matters.” (Reddit)

This resonates with me as a developer. Many times I’ve seen “just add tracking” requests balloon into architectural overhead. When I implemented minimization, not only did security improve, but infra bills dropped.

Practical Developer Strategies in 2025

- Data Minimization and PII Scanning Tools

- Tools like Privado scan codebases for PII usage.

- Helps identify “silent leaks” where personal data is unintentionally logged.

- Consent Management SDKs

- OneTrust, Osano, and open-source consent management platforms (CMPs) integrate with web and mobile apps.

- When configured to make rejecting as easy as accepting, they can provide compliant consent banners without dark patterns.

- Differential Privacy APIs

- Apple uses local differential privacy on iOS to learn popular keyboard and emoji suggestions without learning what any individual user typed.

- Developers can adopt similar strategies for privacy-preserving analytics.

- Zero-knowledge encryption

- Services like Proton Mail show that data can be encrypted so that even the provider can’t read it.

- Dev tip: integrate client-side encryption for sensitive fields.

- Privacy-focused Analytics

- Replace Google Analytics with Plausible, Umami, or Matomo.

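- Plausible and Umami are cookieless by default, and Matomo can be configured to run without cookies; all three are built to simplify GDPR compliance.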

- User Data Portability APIs

- Allow users to export their data (CSV/JSON).

- This builds trust and helps meet regulatory requirements such as the GDPR’s right to data portability.
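A classic, easy-to-audit differential privacy technique is randomized response: each device adds noise to its own answer before reporting, and the server corrects for the known noise in aggregate. The sketch below is illustrative only and is not Apple’s mechanism, which is considerably more elaborate; the function names and the `p_truth` parameter are assumptions, and production systems should rely on a vetted DP library.

```python
import random


def randomized_response(truth: bool, p_truth: float, rng: random.Random) -> bool:
    """Report the true answer with probability p_truth, otherwise a fair coin flip.

    Every individual report is deniable. This satisfies local differential
    privacy with epsilon = ln((1 + p_truth) / (1 - p_truth)); p_truth = 1.0
    means no noise and therefore no privacy.
    """
    if not 0.0 < p_truth <= 1.0:
        raise ValueError("p_truth must be in (0, 1]")
    if rng.random() < p_truth:
        return truth
    return rng.random() < 0.5


def estimate_true_rate(reports: list[bool], p_truth: float) -> float:
    """Invert the known noise: observed_rate = p_truth * true_rate + (1 - p_truth) * 0.5."""
    observed = sum(reports) / len(reports)
    return (observed - (1.0 - p_truth) * 0.5) / p_truth
```

For example, if 30% of users have a feature enabled and each reports with `p_truth = 0.5`, the server expects about 40% “yes” answers (0.5 × 0.3 + 0.25), and `estimate_true_rate` recovers an estimate near 30% even though no single report can be trusted on its own. The estimate gets tighter as more reports arrive.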

Data Lifecycle with Privacy by Design

User Consent → Anonymization → Encryption → Access Control → Right to Erasure
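In practice, the anonymization stage is often implemented as pseudonymization: direct identifiers are replaced with keyed hashes so analytics can still count distinct users. The sketch below uses HMAC-SHA256 from Python’s standard library; the `Pseudonymizer` class and its key handling are hypothetical. Under the GDPR, pseudonymized data still counts as personal data, because whoever holds the key can re-link it.

```python
import hashlib
import hmac
import secrets
from typing import Optional


class Pseudonymizer:
    """Replace direct identifiers with keyed hashes (pseudonymization, not anonymization).

    Hypothetical helper: keep the key in a secrets manager, separate from the
    data, and rotate or destroy it so old pseudonyms stop being linkable.
    """

    def __init__(self, key: Optional[bytes] = None) -> None:
        self._key = key if key is not None else secrets.token_bytes(32)

    def pseudonym(self, identifier: str) -> str:
        # Normalize first so "Alice@Example.com" and "alice@example.com " match.
        normalized = identifier.strip().lower().encode("utf-8")
        return hmac.new(self._key, normalized, hashlib.sha256).hexdigest()

    def rotate(self) -> None:
        """Replace the key; pseudonyms created before rotation no longer match new ones."""
        self._key = secrets.token_bytes(32)
```

A keyed HMAC is used instead of a plain SHA-256 hash because email addresses are guessable: an attacker can hash a list of candidate addresses and match them against unkeyed digests. Rotating or destroying the key is what eventually breaks the link, which also supports the erasure stage.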

Real-World Example: Building a Privacy-First E-commerce App

Imagine you’re building an online store in 2025:

- Checkout analytics → Instead of recording full keystrokes, track only success/failure.

- Personalization → Instead of saving user browsing history, recommend products using on-device AI.

- Consent → Default all marketing toggles OFF, ask users explicitly.

- Logs → Store transaction IDs, not credit card info.

This isn’t hypothetical. Shopify Plus partners already recommend event-based, non-invasive analytics to e-commerce devs.
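For the “transaction IDs, not credit card info” rule, a logging filter can act as a safety net in case a card number slips into a log message anyway. This is a minimal sketch using Python’s standard `logging` module. The regular expression is an assumption that matches 13 to 19 digit runs (optionally separated by spaces or dashes), so it will also mask other long numbers; adding a Luhn checksum test would reduce those false positives.

```python
import logging
import re

# Assumption: 13 to 19 digits, optionally separated by single spaces or dashes.
CARD_NUMBER = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


class RedactCardNumbers(logging.Filter):
    """Mask anything that looks like a card number before a record is emitted."""

    def filter(self, record: logging.LogRecord) -> bool:
        # Format the message once, redact it, and clear args so it isn't re-formatted.
        record.msg = CARD_NUMBER.sub("[REDACTED CARD]", record.getMessage())
        record.args = ()
        return True


if __name__ == "__main__":
    handler = logging.StreamHandler()
    # Attach to the handler so records propagated from child loggers are covered too.
    handler.addFilter(RedactCardNumbers())
    logger = logging.getLogger("checkout")
    logger.addHandler(handler)
    logger.warning("Payment failed for card %s, txn %s", "4111 1111 1111 1111", "txn_8f3a")
    # stderr: Payment failed for card [REDACTED CARD], txn txn_8f3a
```

Filters attached to a logger only see records logged directly on that logger, which is why the sketch attaches the filter to the handler instead.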

My Perspective as a Developer

When I first started coding, I didn’t think twice before adding Google Analytics, Hotjar, Mixpanel, and multiple trackers “just in case.” Looking back, that was reckless.

Today, I realize:

- Most apps don’t need 20 trackers.

- Privacy-first architecture scales better in the long run.

- Users appreciate when you don’t treat them like data mines.

Privacy by Design isn’t just ethical; it’s practical. It reduces attack surface, infrastructure bloat, and maintenance nightmares.

The Future of Privacy by Design (2025–2030)

- On-device AI → More personalization without sending raw data to servers.

- Federated learning → Training models across devices while keeping data local.

- Privacy as USP → Startups that lead with privacy (like Proton, Brave) will continue to grow.

- Global patchwork laws → Devs will need to build modular compliance, as U.S., EU, and Asia adopt different frameworks.
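Federated Averaging (FedAvg), the best-known federated learning algorithm, has a simple server-side step: average the model weights each device trained locally, weighted by how many examples it used. The toy sketch below represents a model as a plain list of floats, and `federated_average` is a hypothetical name. It omits the secure aggregation and differential privacy that real deployments add, since raw model updates can still leak information about training data.

```python
def federated_average(client_updates: list[tuple[list[float], int]]) -> list[float]:
    """Server-side FedAvg step: weight each client's model by its local sample count.

    Only weights reach the server; each device's raw training data stays local.
    """
    total = sum(num_samples for _, num_samples in client_updates)
    if total == 0:
        raise ValueError("no training samples reported")
    size = len(client_updates[0][0])
    averaged = [0.0] * size
    for weights, num_samples in client_updates:
        if len(weights) != size:
            raise ValueError("all clients must share the same model shape")
        for i, w in enumerate(weights):
            averaged[i] += w * num_samples / total
    return averaged
```

For example, `federated_average([([1.0, 2.0], 1), ([3.0, 4.0], 3)])` returns `[2.5, 3.5]`: the client that trained on three examples pulls the result three times as hard as the one that trained on one.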

Final Checklist for Developers

✅ Collect only what you need

✅ Default to opt-in consent

✅ Use privacy-first analytics

✅ Encrypt everything, everywhere

✅ Provide user control and visibility

✅ Regularly audit your codebase for PII

✅ Stay updated on regulations

Conclusion

Privacy is not a blocker to innovation—it’s the pathway to sustainable software. Developers in 2025 can’t ignore ethical data collection; it’s the backbone of user trust, compliance, and competitive advantage.

Whether you’re building an MVP or scaling a global platform, privacy-first engineering is simply good engineering.

FAQs

Q1. What is ethical data collection in software development?

Ethical data collection means gathering only the information that users expect and consent to, while being transparent about its purpose. It goes beyond legal compliance (GDPR, CCPA, DPDP) and focuses on respecting user privacy through minimization, consent, security, and user control.

Q2. How does Privacy by Design (PbD) help developers in 2025?

Privacy by Design provides developers with seven key principles—such as proactive protection, privacy as the default, and end-to-end security—that help them integrate privacy into every stage of app architecture. This ensures compliance, builds trust, and reduces long-term maintenance and legal risks.

Q3. What are some practical tools for implementing privacy-first development?

Developers can use tools like Privado for PII detection, OneTrust or Osano for consent management, Plausible or Umami for privacy-focused analytics, and client-side encryption libraries for zero-knowledge data handling. These tools make privacy compliance more efficient and less error-prone.

Q4. Why should startups care about ethical data collection?

Startups benefit from leaner, privacy-first data strategies because they reduce infrastructure costs, simplify compliance, and lower breach risks. Companies like Figma grew rapidly while collecting minimal user data, proving that privacy-first approaches can be a growth driver rather than a limitation.

Q5. What’s the future of ethical data practices between 2025–2030?

We’ll see more on-device AI, federated learning, and modular compliance frameworks. Privacy will become a core product differentiator, and companies that embrace it—like Proton and Brave—will gain stronger user loyalty and competitive advantage.

🚀 Let's Build Something Amazing Together

Hi, I'm Abdul Rehman Khan, founder of Dev Tech Insights & Dark Tech Insights. I specialize in turning ideas into fast, scalable, and modern web solutions. From startups to enterprises, I've helped teams launch products that grow.

- ⚡ Frontend Development (HTML, CSS, JavaScript)

- 📱 MVP Development (from idea to launch)

- 📱 Mobile & Web Apps (React, Next.js, Node.js)

- 📊 Streamlit Dashboards & AI Tools

- 🔍 SEO & Web Performance Optimization

- 🛠️ Custom WordPress & Plugin Development