Developers vs AI-Generated Code: When Should You Trust the Machine More?


The rise of AI-powered coding assistants like GitHub Copilot, ChatGPT, Tabnine, and Amazon CodeWhisperer has transformed how developers approach software development. From auto-completing functions to generating entire modules, AI promises speed, efficiency, and sometimes even creativity.

But here’s the burning question: Can developers really trust AI-generated code?


In 2025, the answer isn’t as black and white as “yes” or “no.” Instead, it lies somewhere in the middle — where human expertise meets machine intelligence. Let’s explore this complex relationship through data, real-world developer experiences, and critical analysis.

The Current State of AI in Coding

AI isn’t just writing snippets anymore; it’s writing end-to-end solutions. GitHub has reported that, in files where Copilot is enabled, an average of 46% of the code is generated by the assistant. Similarly, JetBrains surveys revealed that more than 60% of developers now rely on AI tools for at least part of their workflow.

Developers are using AI for:

- Auto-completing repetitive syntax

- Generating boilerplate code

- Debugging and suggesting fixes

- Creating test cases

- Translating code between languages

- Writing documentation

Clearly, AI has become an integral teammate. Yet, the debate continues: is it a reliable teammate, or just a fast but careless intern?

Trust Issues: Why Developers Are Still Skeptical

Many developers hesitate to fully trust AI-generated code. Here are the main concerns:

- Accuracy and correctness

AI can generate solutions that look correct but are logically flawed. For example, ChatGPT might confidently suggest a sorting algorithm but miss edge cases.

- Security risks

A Stanford study found that AI-assisted developers were more likely to introduce security vulnerabilities than those coding manually. Common issues included SQL injection and weak input validation.

- Maintainability

AI often writes “quick fix” solutions that don’t align with project architecture or long-term maintainability practices.

- Bias and plagiarism

Since AI is trained on existing code, it sometimes reproduces copyrighted snippets or outdated practices.
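To make the accuracy concern concrete, here is a hypothetical illustration (not output captured from ChatGPT or any other tool) of a sorting function that looks right and passes a quick test, yet misses an edge case. The buggy quicksort partitions with strict `<` and `>`, so any other values equal to the pivot silently disappear; the fixed version uses a three-way partition. Function names are illustrative only.

```python
from typing import List


def quicksort_buggy(xs: List[int]) -> List[int]:
    """Hypothetical plausible-looking quicksort that drops duplicates of the pivot."""
    if len(xs) <= 1:
        return list(xs)
    pivot = xs[0]
    less = [x for x in xs[1:] if x < pivot]
    greater = [x for x in xs[1:] if x > pivot]  # BUG: values equal to pivot vanish
    return quicksort_buggy(less) + [pivot] + quicksort_buggy(greater)


def quicksort(xs: List[int]) -> List[int]:
    """Same idea with a three-way partition, so equal values are kept."""
    if len(xs) <= 1:
        return list(xs)
    pivot = xs[0]
    less = [x for x in xs if x < pivot]
    equal = [x for x in xs if x == pivot]
    greater = [x for x in xs if x > pivot]
    return quicksort(less) + equal + quicksort(greater)


# A happy-path check with distinct values passes for the buggy version...
print(quicksort_buggy([3, 1, 2]))     # [1, 2, 3]
# ...but duplicate values expose the bug.
print(quicksort_buggy([3, 1, 3, 2]))  # [1, 2, 3]  (one 3 is lost)
print(quicksort([3, 1, 3, 2]))        # [1, 2, 3, 3]
```

The fix is a one-line change, which is exactly why this class of bug slips through review: a test suite that only uses distinct values never exercises it.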

As one developer on Reddit’s r/programming said:

“AI is like a junior dev who never sleeps. It can pump out code, but if you don’t review it carefully, you’ll end up with spaghetti that compiles but breaks your system later.”


When Developers Can Trust AI

Despite concerns, there are scenarios where AI shines. Developers across GitHub, Stack Overflow, and Hacker News shared situations where AI tools significantly reduced workload without sacrificing quality.

1. Repetitive or Boilerplate Code

Tasks like setting up REST API routes, generating CRUD operations, or writing unit test templates are perfect for AI. Instead of spending hours typing predictable patterns, developers can focus on refining logic.

Example: A full-stack dev in Berlin shared on Medium how Copilot generated 80% of the boilerplate code for a React-Express project, saving two full days of work.
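For a sense of what “predictable patterns” means in practice, here is a minimal sketch of the kind of CRUD boilerplate and unit test template an assistant typically produces. The `Note` and `NoteStore` classes are hypothetical, in-memory stand-ins for a real database-backed API, written with only the Python standard library.

```python
import unittest
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Note:
    id: int
    text: str


class NoteStore:
    """Hypothetical in-memory CRUD store; a real app would back this with a database."""

    def __init__(self) -> None:
        self._notes: Dict[int, Note] = {}
        self._next_id = 1

    def create(self, text: str) -> Note:
        note = Note(id=self._next_id, text=text)
        self._notes[note.id] = note
        self._next_id += 1
        return note

    def read(self, note_id: int) -> Optional[Note]:
        return self._notes.get(note_id)

    def update(self, note_id: int, text: str) -> Optional[Note]:
        note = self._notes.get(note_id)
        if note is not None:
            note.text = text
        return note

    def delete(self, note_id: int) -> bool:
        # pop() with a default returns None instead of raising KeyError
        return self._notes.pop(note_id, None) is not None


class NoteStoreTest(unittest.TestCase):
    """Template-style tests: one test per CRUD operation."""

    def setUp(self) -> None:
        self.store = NoteStore()

    def test_create_and_read(self) -> None:
        note = self.store.create("hello")
        self.assertEqual(self.store.read(note.id), note)

    def test_update(self) -> None:
        note = self.store.create("draft")
        self.store.update(note.id, "final")
        self.assertEqual(self.store.read(note.id).text, "final")

    def test_delete(self) -> None:
        note = self.store.create("temp")
        self.assertTrue(self.store.delete(note.id))
        self.assertIsNone(self.store.read(note.id))
        self.assertFalse(self.store.delete(note.id))
```

Saved as `test_notes.py`, the tests run with `python -m unittest test_notes`. This is the easy 80%: the interesting work (validation rules, persistence, error handling) is still the developer's job.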

2. Documentation and Comments

AI excels at generating explanatory comments and inline documentation. While not always perfect, it provides a starting point that devs can refine.

3. Prototyping and Ideation

For hackathons and MVPs, speed often matters more than perfection. AI allows developers to test ideas quickly. A founder on Indie Hackers explained how AI helped them prototype a SaaS product in 48 hours — something that would have taken weeks otherwise.

4. Learning and Exploration

Beginners especially benefit from AI-generated code. Instead of staring at documentation, they can ask AI to generate examples, then study and tweak them.

When Developers Should NOT Trust AI

Blind trust in AI can be dangerous. Here are the moments when caution is essential:

1. Security-Critical Applications

If you’re building fintech software, healthcare apps, or authentication systems, AI suggestions need to be triple-checked. An AI-generated SQL query that lacks parameterized inputs can open the door to devastating breaches.
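The difference is easy to show with Python’s built-in `sqlite3` module. The `users` table and both lookup functions below are hypothetical; the point is that the first function splices user input into the SQL text, while the second passes it as a bound parameter through the `?` placeholder, so the driver treats it as data rather than as SQL.

```python
import sqlite3


def setup() -> sqlite3.Connection:
    """Create a throwaway in-memory database with a hypothetical users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, is_admin INTEGER)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", 1), ("bob", 0)],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # VULNERABLE: user input is formatted directly into the SQL string.
    query = f"SELECT name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Parameterized: the driver binds `name` as a value, never as SQL.
    return conn.execute("SELECT name FROM users WHERE name = ?", (name,)).fetchall()


conn = setup()
payload = "' OR '1'='1"
print(find_user_unsafe(conn, payload))  # every row in the table
print(find_user_safe(conn, payload))    # no match: []
```

Both functions behave identically for ordinary names, which is why the unsafe version can pass casual testing and still ship.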

2. Large-Scale System Design

AI may provide snippets, but it lacks a holistic understanding of distributed systems, scalability, or enterprise-level architecture. This is where human judgment is irreplaceable.

3. Domain-Specific Expertise

AI tools are trained on general data. If you’re coding in a specialized domain (e.g., aerospace, cryptography, blockchain consensus), AI may hallucinate solutions that sound right but are dangerously wrong.
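Cryptography offers a compact illustration. The example below is hypothetical, not captured from a specific assistant: generating a reset or session token with Python’s `random` module looks reasonable and works in a quick test, but Python’s documentation warns that `random` should not be used for security purposes and points to the `secrets` module instead. The helper names are assumptions for illustration; `secrets.token_urlsafe` and `hmac.compare_digest` are standard-library functions.

```python
import hmac
import random
import secrets
import string


def make_token_weak(length: int = 32) -> str:
    # Hypothetical "sounds right" version: `random` is a predictable PRNG
    # (Mersenne Twister) and is documented as unsuitable for security.
    alphabet = string.ascii_letters + string.digits
    return "".join(random.choice(alphabet) for _ in range(length))


def make_token(nbytes: int = 32) -> str:
    # `secrets` draws from the OS's cryptographically secure randomness source.
    return secrets.token_urlsafe(nbytes)


def tokens_match(expected: str, provided: str) -> bool:
    # Constant-time comparison avoids leaking, through timing differences,
    # how many leading characters matched (a risk with a plain `==`).
    return hmac.compare_digest(expected.encode(), provided.encode())


token = make_token()
print(tokens_match(token, token))        # True
print(tokens_match(token, token + "x"))  # False
```

Both token functions return random-looking strings, so nothing in a functional test distinguishes them; only domain knowledge does.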

Useful Links

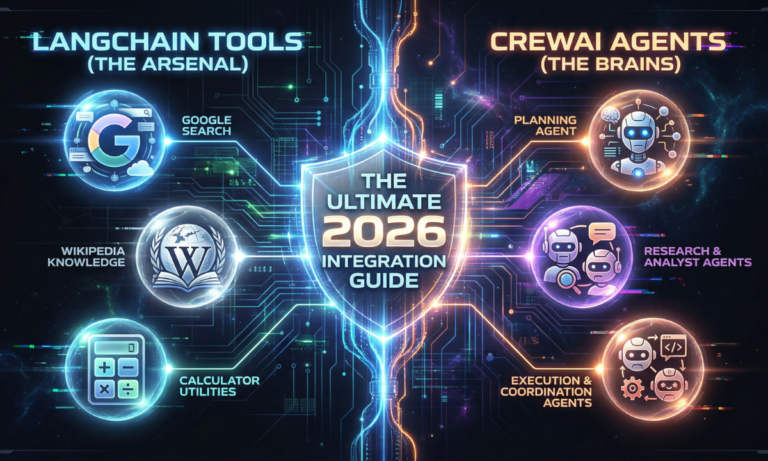

- The Era of Small Language Models (SLMs): Why 2026 Belongs to Edge AI

- Microsoft AutoGen vs. CrewAI: I Ran a “Code Battle” to See Who Wins in 2026

- How to Build AI Agents with LangChain and CrewAI (The Complete 2026 Guide)

- Beyond the Chatbot: Why 2026 is the Year of Agentic AI

- Why Developers Are Moving from ChatGPT to Local LLMs (2025)

- LangChain vs. LlamaIndex (2026): Which AI Framework Should You Choose?

4. Legal and Ethical Risks

Copy-paste AI code without attribution, and you might face copyright issues. OpenAI, GitHub, and others are still under scrutiny for training their models on public repositories without explicit permission.

The Human-AI Partnership: A Balanced Approach

The future of coding is not developers vs AI; it’s developers with AI. The smartest devs are those who leverage AI while maintaining critical oversight.

Think of AI as:

- A productivity booster for repetitive tasks.

- A brainstorming partner for exploring new approaches.

- A code reviewer that sometimes spots issues humans miss.

But also remember:

- You are the architect. AI can’t design entire systems for long-term success.

- You are the reviewer. Every line of AI code needs a human’s eye before going into production.

As senior engineer Anna McNally put it on Hacker News:

“AI won’t replace developers. But developers who know how to use AI effectively will replace those who don’t.”

Real Experiences From Developers

To ground this debate, let’s look at real testimonials:

- James, Backend Dev, UK

“I use Copilot daily for writing tests. Honestly, it feels like cheating sometimes. But when I let it generate logic-heavy code, I end up rewriting 70% of it.”

- Priya, Mobile Developer, India

“ChatGPT saved me in a client project. I had two hours to fix a Firebase auth bug, and AI gave me a solution in 5 minutes. But I wouldn’t trust it for payments or security.”

- David, DevOps Engineer, US

“AI is great at writing Terraform configs and YAML. But it once suggested a wrong AWS IAM policy that would have opened my S3 bucket to the world. Luckily, I caught it in review.”

These stories point to the same conclusion: AI is powerful, but risky when left unchecked.
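A near miss like David’s can be backed up by a small automated check in review. The sketch below is a hypothetical helper, not part of any AWS SDK: it scans an S3 bucket policy (a resource-based policy written in the IAM policy JSON language) and flags `Allow` statements whose `Principal` is the wildcard `"*"`, which grants access to anyone. It deliberately ignores `Condition` blocks, so it can over-flag; treat its output as a prompt for human review.

```python
import json
from typing import Any, Dict, List


def _is_wildcard_principal(principal: Any) -> bool:
    """True if the principal is "*", {"AWS": "*"}, or {"AWS": [..., "*", ...]}."""
    if principal == "*":
        return True
    if isinstance(principal, dict):
        aws = principal.get("AWS")
        if aws == "*":
            return True
        if isinstance(aws, list) and "*" in aws:
            return True
    return False


def find_public_statements(policy_json: str) -> List[Dict[str, Any]]:
    """Hypothetical review aid: return Allow statements open to any principal.

    Condition blocks are ignored, so a statement narrowed by a condition
    is still flagged for a human to inspect.
    """
    policy = json.loads(policy_json)
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be an object
        statements = [statements]
    return [
        s for s in statements
        if s.get("Effect") == "Allow" and _is_wildcard_principal(s.get("Principal"))
    ]


# Hypothetical bucket name; this statement makes every object publicly readable.
risky_policy = """
{
  "Version": "2012-10-17",
  "Statement": [{
    "Effect": "Allow",
    "Principal": "*",
    "Action": "s3:GetObject",
    "Resource": "arn:aws:s3:::example-bucket/*"
  }]
}
"""
for stmt in find_public_statements(risky_policy):
    print("Public access granted:", stmt["Action"], "on", stmt["Resource"])
```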

The Future: Will AI Replace Developers?

This is the question that never dies. Will AI replace human programmers entirely?

The short answer: not anytime soon.

- AI can generate code, but it can’t replace problem-solving, creativity, and context awareness.

- Developers bring domain expertise, ethical reasoning, and long-term planning.

- AI is more likely to become a force multiplier, increasing developer productivity by 2x–5x.

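In fact, McKinsey estimated in 2023 that generative AI could add $2.6 trillion to $4.4 trillion in value annually across the global economy, with software engineering among the areas expected to capture the largest share. But humans will remain at the core.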

Final Thoughts

So, when should you trust AI-generated code?

- Trust AI for boilerplate, documentation, prototyping, and learning.

- Don’t trust AI blindly for mission-critical, security-heavy, or domain-specific code.

- Always review, always refine.

In the end, the best developers are not those who reject AI, but those who know when to lean on it and when to take control themselves.


FAQs

1. Is AI-generated code safe for production?

Yes, but only after a human reviews it thoroughly. AI can introduce subtle bugs or vulnerabilities.

2. Can AI-generated code pass coding interviews?

Sometimes. AI can solve LeetCode-style problems, but it lacks deeper problem-solving skills.

3. What’s the biggest risk of trusting AI-generated code?

Security vulnerabilities and hidden logic errors.

4. Will junior developers lose jobs to AI?

Not if they adapt. Juniors who use AI effectively will remain valuable.

5. Which AI coding assistant is best in 2025?

Copilot leads in IDE integration, ChatGPT excels at explanations, Tabnine is lightweight, and CodeWhisperer is strong for AWS users.

🚀 Let's Build Something Amazing Together

Hi, I'm Abdul Rehman Khan, founder of Dev Tech Insights & Dark Tech Insights. I specialize in turning ideas into fast, scalable, and modern web solutions. From startups to enterprises, I've helped teams launch products that grow.
