The Ethics of AI Coding: Where Developers Must Draw the Line

Introduction: When Code Shapes Society

Artificial Intelligence (AI) is no longer a futuristic buzzword — it’s deeply embedded in the way we live, work, and connect. From AI-powered hiring tools to healthcare diagnosis systems, and from language models like ChatGPT to self-driving vehicles, the code we write today has the power to shape the moral, social, and even political fabric of tomorrow.

But here’s the uncomfortable truth: with great power comes great responsibility, and the race for speed and innovation often pushes ethics in AI coding to the back of the queue.

As a developer who has worked on AI-driven projects, I’ve seen firsthand how easily ethical red flags can be ignored in the excitement of creating “the next big thing.” The question isn’t whether we can build powerful AI systems — it’s whether we should, and how we ensure these systems are responsible, fair, and trustworthy.

Why AI Coding Ethics Can’t Be Ignored

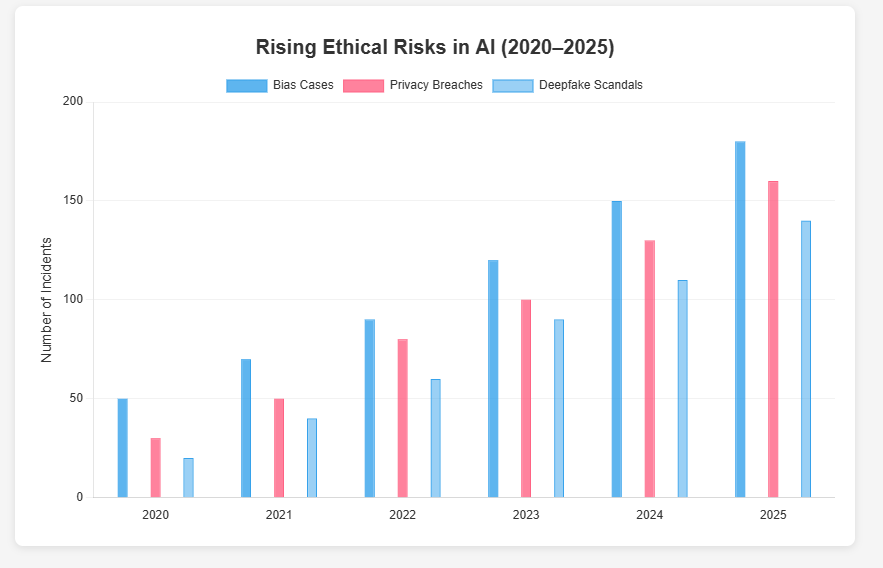

AI isn’t neutral. Every line of code, every dataset, and every prompt can carry bias, unintended consequences, or even malicious potential. For example:

- Biased datasets in recruitment AI that discriminate against women or minority groups.

- Surveillance AI that compromises privacy and civil liberties.

- Deepfake technology that spreads misinformation.

- Unregulated coding shortcuts that make models vulnerable to prompt injection or data poisoning.

From my own projects, I’ve seen how tempting it is to skip ethical review steps under client pressure or deadlines. But ignoring ethics doesn’t just risk public backlash — it undermines user trust and long-term credibility.

Core Ethical Challenges in AI Coding

1. Bias in Data and Algorithms

AI systems are only as good as the data we feed them. But what if that data reflects historical discrimination or incomplete perspectives?

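- Example: Amazon scrapped an experimental AI recruiting tool after it was found to favor male candidates. Reuters reported in 2018 that the model, trained on a decade of mostly male résumés, penalized résumés containing the word "women's".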

- Developer Responsibility: Actively audit and diversify datasets.
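To make "audit your dataset" a bit more concrete, here is a minimal sketch of one common check: comparing selection rates across groups. The field names (`gender`, `shortlisted`) and the sample decisions are hypothetical, and the 0.8 cutoff is the "four-fifths" rule of thumb, used here only as a trigger for human review, not as proof of fairness or unfairness.

```python
from collections import defaultdict

def selection_rates(records, group_key, outcome_key):
    """Return the share of positive outcomes for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for row in records:
        group = row[group_key]
        totals[group] += 1
        if row[outcome_key]:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group was favored
    return min(rates.values()) / highest

# Hypothetical screening results; a real audit would use the model's actual decisions.
decisions = [
    {"gender": "female", "shortlisted": True},
    {"gender": "female", "shortlisted": False},
    {"gender": "female", "shortlisted": False},
    {"gender": "female", "shortlisted": False},
    {"gender": "male", "shortlisted": True},
    {"gender": "male", "shortlisted": True},
    {"gender": "male", "shortlisted": False},
    {"gender": "male", "shortlisted": False},
]

rates = selection_rates(decisions, "gender", "shortlisted")
ratio = disparate_impact_ratio(rates)
print(rates)
print(f"disparate impact ratio: {ratio:.2f}")
if ratio < 0.8:  # four-fifths rule of thumb, an assumption chosen for this sketch
    print("Flag for review: selection rates differ substantially across groups")
```

A single ratio will not catch every kind of bias, so treat a passing score as a starting point for the audit, not the end of it.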

2. Transparency vs. Black Box Models

Many advanced models (like deep learning systems) are so complex that even developers struggle to explain how they arrive at decisions. This “black box” problem raises huge trust issues.

- Example: A patient denied insurance by an AI system has no way of knowing why.

- Solution: Integrate explainability tools like LIME or SHAP, which estimate how much each input feature contributed to an individual prediction.
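If you want a feel for what model-agnostic explanation looks like before adopting a full library, the sketch below uses permutation importance. This is a simpler technique than LIME or SHAP, not a substitute for them: it shuffles one feature at a time and measures how much accuracy drops. The `toy_model`, feature names, and data are all hypothetical stand-ins.

```python
import random

def accuracy(model, rows, labels):
    """Fraction of rows where the model's prediction matches the label."""
    correct = sum(1 for row, label in zip(rows, labels) if model(row) == label)
    return correct / len(rows)

def permutation_importance(model, rows, labels, seed=0):
    """Accuracy drop observed when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    drops = []
    for j in range(len(rows[0])):
        column = [row[j] for row in rows]
        rng.shuffle(column)
        # Rebuild every row with feature j replaced by its shuffled value.
        shuffled = [row[:j] + [value] + row[j + 1:] for row, value in zip(rows, column)]
        drops.append(baseline - accuracy(model, shuffled, labels))
    return drops

# Hypothetical stand-in for a trained model: approves when income (feature 0) exceeds 50.
def toy_model(row):
    return row[0] > 50

FEATURES = ["income", "age"]
rows = [[30, 25], [80, 40], [45, 33], [95, 51], [20, 62], [70, 29]]
labels = [toy_model(row) for row in rows]  # we explain the model's own behavior

drops = permutation_importance(toy_model, rows, labels)
for name, drop in zip(FEATURES, drops):
    print(f"{name}: accuracy drop {drop:.2f}")
```

Because the toy model never looks at `age`, shuffling that column cannot change any prediction, so its drop is zero. A feature the model leans on heavily will typically show a larger drop.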

3. Data Privacy and Ownership

User data fuels most AI systems, but without clear consent and safeguards, privacy violations are inevitable.

- Example: Voice assistants recording private conversations.

- Action Point: Developers must ensure compliance with GDPR, HIPAA, and local data laws.
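One way to enforce consent in code is to filter data by purpose before it ever reaches a training or analytics pipeline. The sketch below assumes a hypothetical `UserRecord` type and purpose labels such as `"model_training"`. It illustrates the pattern only and does not by itself make a system compliant with GDPR, HIPAA, or any other law.

```python
from dataclasses import dataclass, field

@dataclass
class UserRecord:
    user_id: str
    payload: dict
    # Purposes the user explicitly agreed to; empty means no consent recorded.
    consented_purposes: set = field(default_factory=set)

def records_usable_for(records, purpose):
    """Keep only records whose owner explicitly consented to this purpose."""
    return [r for r in records if purpose in r.consented_purposes]

# Hypothetical records for illustration.
records = [
    UserRecord("u1", {"query": "reset password"}, {"support", "model_training"}),
    UserRecord("u2", {"query": "billing issue"}, {"support"}),
    UserRecord("u3", {"query": "cancel plan"}),  # no consent recorded
]

training_set = records_usable_for(records, "model_training")
print([r.user_id for r in training_set])
```

The default here is exclusion: a record with no consent on file is never used, which is the safer failure mode.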

4. Autonomous Decision-Making

When AI systems act without human oversight, the consequences can be irreversible.

- Example: Self-driving cars facing ethical choices in crash scenarios.

- Developer Role: Always build human-in-the-loop systems for critical decisions.
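A human-in-the-loop rule can be as simple as a routing function that refuses to automate anything high-stakes or uncertain. This is a minimal sketch: the `Decision` type, the action names, and the 0.9 confidence threshold are all assumptions chosen for illustration.

```python
from dataclasses import dataclass

@dataclass
class Decision:
    action: str
    confidence: float  # model's confidence in the action, between 0 and 1
    high_stakes: bool  # set by policy, not by the model itself

def route(decision, min_confidence=0.9):
    """Return 'auto' only for low-stakes, high-confidence decisions."""
    if decision.high_stakes or decision.confidence < min_confidence:
        return "human_review"
    return "auto"

# Hypothetical decisions for illustration.
print(route(Decision("approve_refund", 0.97, high_stakes=False)))
print(route(Decision("deny_insurance_claim", 0.99, high_stakes=True)))
print(route(Decision("approve_refund", 0.60, high_stakes=False)))
```

Note that a high-stakes decision goes to a person even when the model is very confident. Confidence measures how sure the model is, not how costly a mistake would be.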

5. Weaponization of AI

From deepfakes to automated cyberattacks, unethical AI coding can directly endanger global security.

- Developer Responsibility: Refuse to work on or contribute to unethical projects.

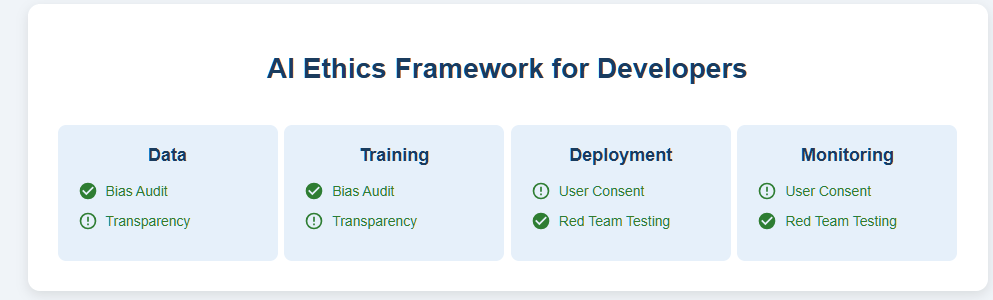

Framework for Responsible AI Coding

To make this actionable, here’s a step-by-step ethical coding framework every developer can apply:

- Dataset Verification: Ensure diversity and bias testing.

- Explainability Tools: Implement transparency in outputs.

- User Consent First: Always get explicit permission before data use.

- Ethical Review Board: At least one external review of project impact.

- Red Team Testing: Actively simulate misuse and attacks.

- Continuous Monitoring: Track model drift and ethical compliance.
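For the continuous monitoring step, one widely used drift signal is the Population Stability Index (PSI), which compares how a feature was distributed at training time with how it looks in production. The sketch below is a bare-bones version with assumed settings (five equal-width bins, a small `eps` to avoid dividing by zero) and made-up sample data. The 0.2 alert level is a common rule of thumb, not a standard.

```python
import math

def psi(expected, actual, bins=5, eps=1e-6):
    """Population Stability Index between a baseline sample and a recent sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # Floor each share at eps so empty bins do not break the logarithm.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Hypothetical feature values: training-time baseline vs. shifted production data.
baseline = [0.1 * i for i in range(50)]
recent = [v + 2.0 for v in baseline]

score = psi(baseline, recent)
print(f"PSI: {score:.2f}")
if score > 0.2:  # rule-of-thumb alert level, an assumption for this sketch
    print("Input distribution has shifted; review the model before trusting it")
```

Drift in the inputs does not prove the model is now unfair or wrong, but it is a cheap signal that the earlier bias and accuracy checks may no longer apply.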

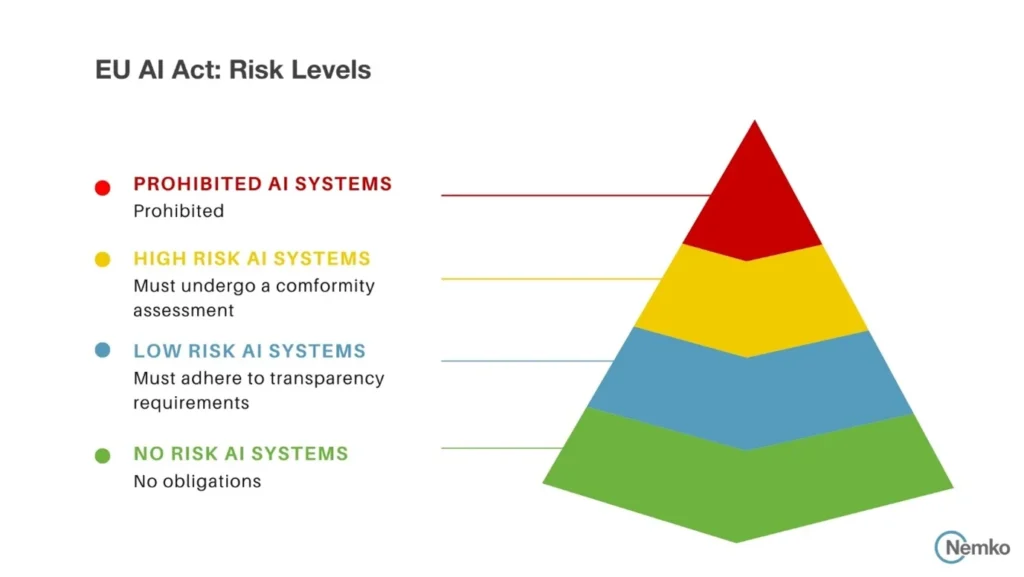

Regulatory Landscape Developers Must Know

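- EU AI Act (in force since August 2024): Sorts AI systems into risk tiers (unacceptable, high, limited, and minimal risk), with obligations that scale with the tier.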

- IEEE Ethically Aligned Design: Developer guidelines for responsible AI.

- NIST AI Risk Management Framework: A voluntary framework, released in 2023, that organizes risk work into four functions: Govern, Map, Measure, and Manage.

Developers who ignore these frameworks risk not just bad PR but serious legal consequences.

The Trust Factor: Why Ethical AI Wins

Users today are smarter than ever. They can tell when a system is manipulative or unsafe.

- A 2025 Gartner survey shows 72% of enterprises prefer ethical AI vendors over cheaper, opaque alternatives.

- Developers with an ethical reputation are increasingly preferred in hiring.

In short: ethics isn’t a burden — it’s a competitive advantage.

FAQs

Q1: How can developers detect bias in their AI systems?

Use fairness auditing tools like IBM AI Fairness 360 or Microsoft Fairlearn.

Q2: What’s the biggest ethical risk in AI coding today?

Biased decision-making in critical sectors like healthcare and finance.

Q3: Should developers refuse unethical projects?

Yes. Long-term reputation and legal safety outweigh short-term gain.

Q4: Are there global standards for ethical AI coding?

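There is no single binding global standard yet, but the EU AI Act, IEEE's Ethically Aligned Design guidelines, and the NIST AI Risk Management Framework are the key references.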

Q5: How can small developer teams practice ethical AI without big budgets?

Use free open-source toolkits such as AI Fairness 360 or Fairlearn, conduct peer reviews, and enforce human oversight for critical decisions.

Conclusion: Drawing the Line as Developers

AI coding ethics isn’t just about compliance — it’s about shaping a future we’d be proud to live in. The next time you’re writing a line of AI code, ask yourself:

“If my AI makes a mistake, who pays the price?”

That’s the line every developer must recognize — and never cross.

Author Box

Written by Abdul Rehman Khan, developer, blogger, and SEO expert passionate about building responsible tech. Sharing insights to help developers code with both power and principle.

🚀 Let's Build Something Amazing Together

Hi, I'm Abdul Rehman Khan, founder of Dev Tech Insights & Dark Tech Insights. I specialize in turning ideas into fast, scalable, and modern web solutions. From startups to enterprises, I've helped teams launch products that grow.

- ⚡ Frontend Development (HTML, CSS, JavaScript)

- 📱 MVP Development (from idea to launch)

- 📱 Mobile & Web Apps (React, Next.js, Node.js)

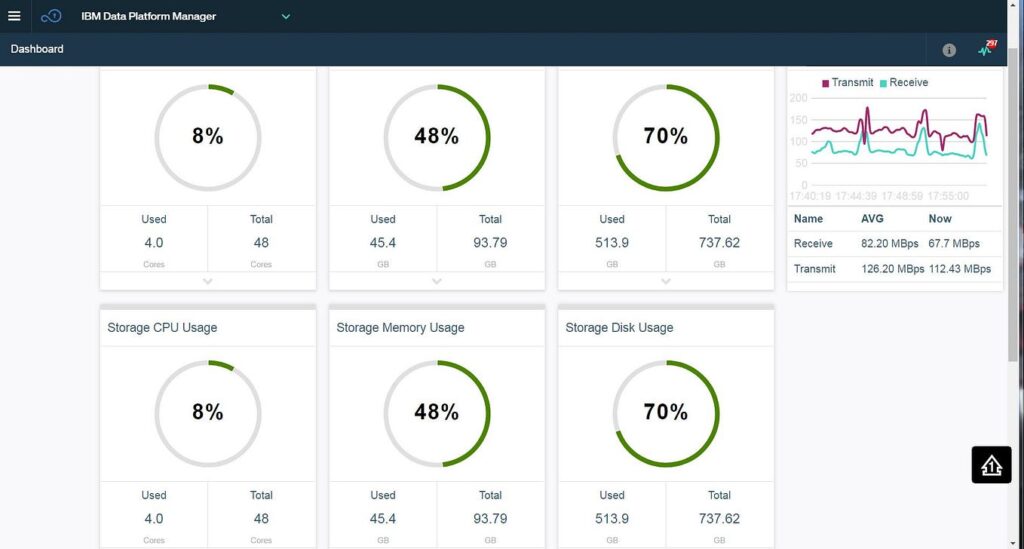

- 📊 Streamlit Dashboards & AI Tools

- 🔍 SEO & Web Performance Optimization

- 🛠️ Custom WordPress & Plugin Development