Cybersecurity for AI Workloads: Protecting ML Pipelines in 2025 and Beyond

Introduction

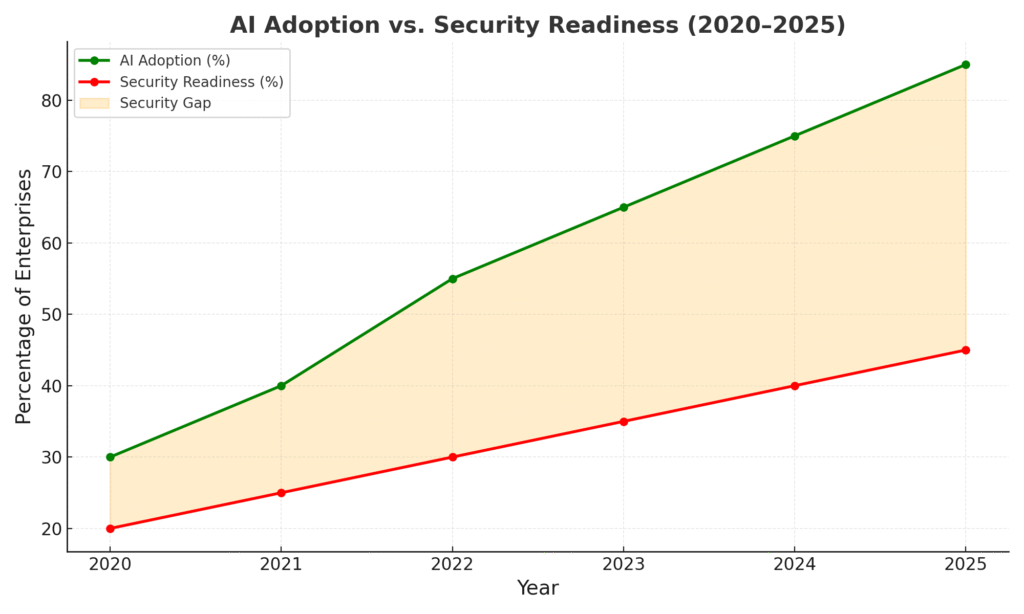

Artificial intelligence has crossed the line from innovation to infrastructure. By 2025, AI isn’t just powering recommendation engines; it’s driving autonomous vehicles, financial trading, healthcare diagnostics, and cybersecurity systems themselves. But the rise of AI also brings a dark side: an expanding attack surface that traditional IT security wasn’t designed to handle.

AI adoption in enterprises has surged more in the last three years than in the entire previous decade. From advanced code generators to autonomous agents, the landscape is evolving rapidly, with some of the biggest AI releases of 2025 reshaping development workflows.

From prompt injection attacks on large language models to data poisoning of machine learning pipelines, AI systems face threats that are both unique and evolving. Protecting these workloads is no longer optional — it’s a mission-critical priority.

This blog breaks down the risks, defenses, and frameworks you need to secure ML pipelines in 2025 and beyond.

Why AI Workload Security Is a Growing Crisis

The urgency is real. Reports show that:

- 80% of enterprises now use AI, but only 30% have AI-specific security frameworks.

- Data sprawl has returned with a vengeance — thanks to uncontrolled AI-generated content.

- Autonomous AI agents are increasingly targeted by attackers, with identity spoofing becoming a top risk.

- Nearly 85% of ML models never make it to production due to fragmented MLOps practices — leaving security gaps wide open.

AI systems don’t just process code; they learn from data. This makes them particularly vulnerable to adversarial manipulation and trust attacks.

While AI adoption skyrockets, security practices have not kept pace. This is especially evident with the rise of decentralized intelligence, such as Edge AI in 2025, where offline devices now handle sensitive workloads without adequate protection.

Core Threats to ML Pipelines

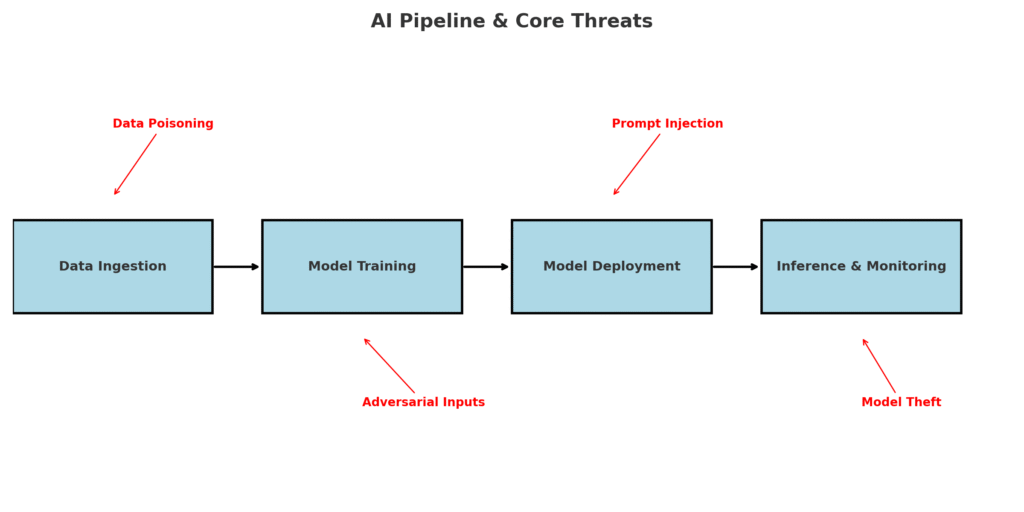

1. Data Poisoning

Attackers inject malicious data into training sets, altering how models behave without detection.

2. Adversarial Attacks

Specially crafted inputs trick models into misclassifying data — for example, fooling an AI-powered medical diagnosis tool.

3. Prompt Injection

Attackers sneak malicious instructions into user input or documents, hijacking large language models.

4. Supply Chain Attacks

Compromised open-source libraries or pretrained models can secretly leak data or execute hidden code.

5. Model Theft & Exfiltration

Hackers reverse-engineer or scrape inference APIs to steal intellectual property.

6. Shadow AI & Rogue Agents

Unauthorized AI models or “shadow apps” bypass governance, creating blind spots.

One of the fastest-growing risks in 2025 is prompt injection, where malicious actors manipulate inputs to make AI models behave unpredictably. Similar attacks are already seen in the wild, with criminals exploiting LLMs for phishing and scams — as detailed in our guide on how hackers are exploiting ChatGPT for scams.

Beyond prompt injection, model theft has become a real threat. Just as open-source maintainers face burnout and hidden risks, organizations are beginning to realize the dark side of open source projects when it comes to AI security.

Secure by Design: Building AI Systems Safely

Security must begin before a single line of model code is written.

- Threat Modeling Early: Identify how data, models, and APIs could be exploited.

- Least Privilege Access: Give every agent and process only the permissions it needs.

- Security Posture as Code: Automate policies for drift detection and compliance.

- Auditable Pipelines: Treat every dataset and model version as a signed artifact.

This “shift-left” approach prevents vulnerabilities from becoming production disasters.
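To make the "signed artifact" idea concrete, here is a minimal sketch that hashes a dataset or model file and signs the digest with HMAC-SHA256 from the Python standard library. The function names and the demo key are hypothetical, and a shared-secret HMAC is an assumption made for brevity; a production pipeline would more likely use asymmetric signatures with keys held in a KMS or vault.

```python
import hashlib
import hmac


def artifact_digest(data: bytes) -> str:
    """Return the SHA-256 hex digest of an artifact's bytes."""
    return hashlib.sha256(data).hexdigest()


def sign_artifact(data: bytes, key: bytes) -> str:
    """Sign the artifact digest with HMAC-SHA256 (key is a hypothetical shared secret)."""
    return hmac.new(key, artifact_digest(data).encode(), hashlib.sha256).hexdigest()


def verify_artifact(data: bytes, key: bytes, signature: str) -> bool:
    """Recompute the signature and compare it in constant time."""
    return hmac.compare_digest(sign_artifact(data, key), signature)


if __name__ == "__main__":
    key = b"demo-key"  # assumption: real keys come from a secrets manager
    dataset = b"id,label\n1,cat\n2,dog\n"
    signature = sign_artifact(dataset, key)

    print(verify_artifact(dataset, key, signature))               # untouched dataset
    print(verify_artifact(dataset + b"3,cat\n", key, signature))  # modified dataset
```

Storing the signature next to each dataset and model version lets a training or deployment job refuse any artifact that fails verification.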

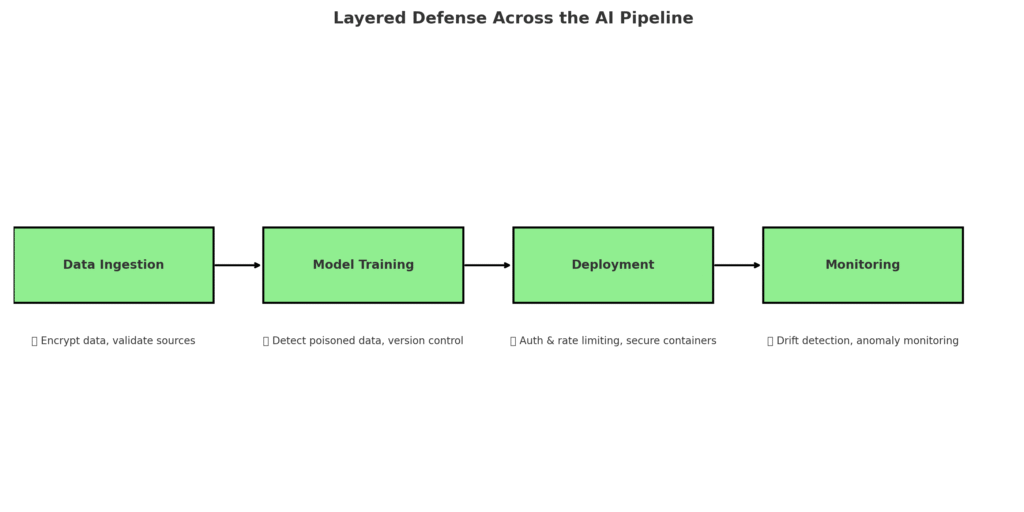

Layered Defense: Protecting Each Pipeline Stage

Data Ingestion

- Validate all external data.

- Encrypt in transit and at rest.

- Verify dataset provenance to avoid hidden bias or poisoning.
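As a rough illustration of "validate all external data", the sketch below checks each incoming record against a small schema before it is allowed into a training set. The schema, field names, and ranges are invented for the example; real pipelines often use a dedicated validation library instead.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional


@dataclass(frozen=True)
class FieldRule:
    kind: type                       # exact Python type expected for the field
    minimum: Optional[float] = None  # inclusive lower bound, if any
    maximum: Optional[float] = None  # inclusive upper bound, if any


# Hypothetical schema for a tabular dataset.
SCHEMA: Dict[str, FieldRule] = {
    "age": FieldRule(int, 0, 120),
    "income": FieldRule(float, 0.0, None),
    "label": FieldRule(str),
}


def validate_record(record: Dict[str, Any], schema: Dict[str, FieldRule]) -> List[str]:
    """Return a list of problems; an empty list means the record passed."""
    errors: List[str] = []
    for name, rule in schema.items():
        if name not in record:
            errors.append(f"missing field: {name}")
            continue
        value = record[name]
        if type(value) is not rule.kind:
            errors.append(f"{name}: expected {rule.kind.__name__}")
            continue
        if rule.minimum is not None and value < rule.minimum:
            errors.append(f"{name}: below minimum {rule.minimum}")
        if rule.maximum is not None and value > rule.maximum:
            errors.append(f"{name}: above maximum {rule.maximum}")
    for name in record:
        if name not in schema:
            errors.append(f"unexpected field: {name}")
    return errors


if __name__ == "__main__":
    print(validate_record({"age": 34, "income": 52000.0, "label": "approved"}, SCHEMA))
    print(validate_record({"age": 999, "income": 52000.0, "label": "approved"}, SCHEMA))
```

Records that fail can be quarantined for review rather than silently dropped, which also leaves a trail if someone is probing the ingestion path.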

Model Training

- Use anomaly detection to identify poisoned samples.

- Keep immutable logs of training sessions.

- Test for adversarial robustness before deployment.
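One simple form of anomaly detection for training data is a robust outlier check on a single feature. The sketch below uses the median and median absolute deviation (a modified z-score); the 3.5 threshold is a common convention, not something prescribed here. Treat it as a first filter only: it catches crude poisoning but not carefully crafted samples that stay inside the normal range.

```python
import statistics
from typing import List, Sequence


def flag_outliers(values: Sequence[float], threshold: float = 3.5) -> List[int]:
    """Return indices of values whose modified z-score exceeds the threshold."""
    median = statistics.median(values)
    deviations = [abs(v - median) for v in values]
    mad = statistics.median(deviations)
    if mad == 0:
        # Most values are identical, so the score is undefined; flag nothing.
        return []
    return [
        i for i, v in enumerate(values)
        if abs(0.6745 * (v - median) / mad) > threshold
    ]


if __name__ == "__main__":
    feature = [0.9, 1.1, 1.0, 1.05, 0.95, 9.0]  # last value is a planted outlier
    print(flag_outliers(feature))
```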

Deployment & Serving

- Protect inference APIs with authentication and rate limits.

- Deploy in secure containerized environments.

- Monitor runtime behavior for unusual spikes.
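Authentication and rate limiting on an inference API can be sketched with a constant-time key comparison and a token bucket. The class and function names below are hypothetical, and in practice this logic usually lives in an API gateway rather than in application code.

```python
import hmac
import time
from typing import Optional


def is_authorized(presented_key: str, expected_key: str) -> bool:
    """Compare API keys in constant time to avoid leaking timing information."""
    return hmac.compare_digest(presented_key.encode(), expected_key.encode())


class TokenBucket:
    """Allow short bursts up to `capacity`, refilled at `refill_per_second`."""

    def __init__(self, capacity: int, refill_per_second: float,
                 now: Optional[float] = None) -> None:
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.tokens = float(capacity)
        self.updated = time.monotonic() if now is None else now

    def allow(self, now: Optional[float] = None) -> bool:
        """Consume one token if available; `now` can be injected for testing."""
        now = time.monotonic() if now is None else now
        elapsed = max(0.0, now - self.updated)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


if __name__ == "__main__":
    bucket = TokenBucket(capacity=2, refill_per_second=1.0)
    if is_authorized("client-key", "client-key"):
        print([bucket.allow() for _ in range(3)])  # third call exceeds the burst
```

Keeping one bucket per client identity also makes large-scale scraping of an inference API, the kind used for model extraction, slower and easier to spot.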

Continuous Monitoring

- Watch for model drift that could indicate attacks.

- Log and audit every prediction request.
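For auditing prediction requests, one option is a hash-chained log in which every entry commits to the one before it, so editing or deleting an earlier entry breaks the chain. This is a minimal in-memory sketch with made-up event fields. A bare hash chain is only tamper-evident, so a real deployment would also sign or externally anchor the latest hash.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def _entry_hash(prev_hash: str, event: Dict[str, Any]) -> str:
    payload = json.dumps(event, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode()).hexdigest()


def append_entry(log: List[Dict[str, Any]], event: Dict[str, Any]) -> Dict[str, Any]:
    """Append an event, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {"event": event, "prev_hash": prev_hash, "hash": _entry_hash(prev_hash, event)}
    log.append(entry)
    return entry


def verify_chain(log: List[Dict[str, Any]]) -> bool:
    """Recompute every hash and confirm each entry links to its predecessor."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True


if __name__ == "__main__":
    audit_log: List[Dict[str, Any]] = []
    append_entry(audit_log, {"model": "fraud-v3", "request_id": "r-1", "score": 0.12})
    append_entry(audit_log, {"model": "fraud-v3", "request_id": "r-2", "score": 0.97})
    print(verify_chain(audit_log))

    audit_log[0]["event"]["score"] = 0.99  # simulate tampering
    print(verify_chain(audit_log))
```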

Securing deployment requires strict authentication, sandboxing, and real-time anomaly detection. Many developers are already using the best cybersecurity tools in 2025 to build stronger defenses without breaking their budgets.

Identity & Agent Security for Autonomous Models

With AI agents making real decisions, identity is non-negotiable.

- Assign each agent a unique digital identity.

- Enforce multi-factor authentication for critical actions.

- Limit access through scoped permissions.

- Use sandbox environments to test agent behavior before granting production access.

Without identity safeguards, rogue agents can act autonomously with catastrophic consequences.
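The identity and scoped-permission points can be sketched as a small check that runs before any sensitive action. The `AgentIdentity` type, the scope strings, and the two example actions are all hypothetical; a real system would typically back this with an identity provider and short-lived credentials.

```python
import uuid
from dataclasses import dataclass, field
from typing import FrozenSet


@dataclass(frozen=True)
class AgentIdentity:
    name: str
    scopes: FrozenSet[str]
    # Each agent gets its own identifier so actions can be attributed to it.
    agent_id: str = field(default_factory=lambda: str(uuid.uuid4()))


class PermissionDenied(Exception):
    """Raised when an agent attempts an action outside its scopes."""


def require_scope(agent: AgentIdentity, scope: str) -> None:
    """Raise PermissionDenied unless the agent holds the requested scope."""
    if scope not in agent.scopes:
        raise PermissionDenied(f"{agent.name} ({agent.agent_id}) lacks scope {scope!r}")


def read_features(agent: AgentIdentity) -> str:
    require_scope(agent, "features:read")
    return "feature rows"


def deploy_model(agent: AgentIdentity) -> str:
    require_scope(agent, "models:deploy")
    return "deployed"


if __name__ == "__main__":
    bot = AgentIdentity("report-bot", frozenset({"features:read"}))
    print(read_features(bot))
    try:
        deploy_model(bot)
    except PermissionDenied as exc:
        print(f"blocked: {exc}")
```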

As AI agents grow more autonomous, ensuring trust becomes critical. The shift toward Agentic AI means developers must think about Zero Trust architectures for not just apps, but the models themselves.

Infrastructure-Level Protections & Segmentation

AI workloads often span multiple clouds and edge systems — meaning one weak link could compromise everything.

- Deploy Zero Trust segmentation across data, training, and inference layers.

- Use microsegmentation to limit lateral movement.

- Continuously scan container images for vulnerabilities.

- Monitor API traffic for anomalies in both request frequency and content.

Continuous Monitoring, Drift Detection & Zero Trust

AI pipelines evolve with data — and so do their vulnerabilities.

- Model Drift Detection: Alerts you when a model starts behaving differently from expected patterns.

- Zero Trust: Never allow implicit trust between systems. Each request, even internal, must be authenticated.

- Immutable Backups: Keep signed backups of every major model version for fast rollbacks.
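One common way to put a number on drift is the Population Stability Index (PSI), which compares the distribution of a model's recent scores against a baseline. The sketch below is a plain-Python version with equal-width bins, a choice made here for simplicity. A frequently cited rule of thumb treats PSI above roughly 0.25 as a significant shift, but that threshold is a convention to tune for your own data.

```python
import math
from typing import List, Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between a baseline and a recent sample."""
    low, high = min(expected), max(expected)
    width = (high - low) / bins
    if width == 0:
        width = 1.0  # degenerate baseline: everything falls in the first bin

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            index = int((v - low) / width)
            counts[min(max(index, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


if __name__ == "__main__":
    baseline = [i / 100 for i in range(100)]       # scores spread over [0, 1)
    recent = [0.5 + i / 200 for i in range(100)]   # scores shifted into [0.5, 1)
    print(f"PSI vs. itself: {psi(baseline, baseline):.3f}")
    print(f"PSI vs. shifted: {psi(baseline, recent):.3f}")
```

Running a check like this on a schedule and alerting when the value crosses your chosen threshold gives a simple trigger for rolling back to a signed earlier model version.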

Accuracy isn’t static — models naturally degrade over time. When drift is detected, it’s crucial to act fast. Developers can borrow lessons from frontend engineering, where observability tools for real user monitoring have already proven essential in catching subtle changes before they become disasters.

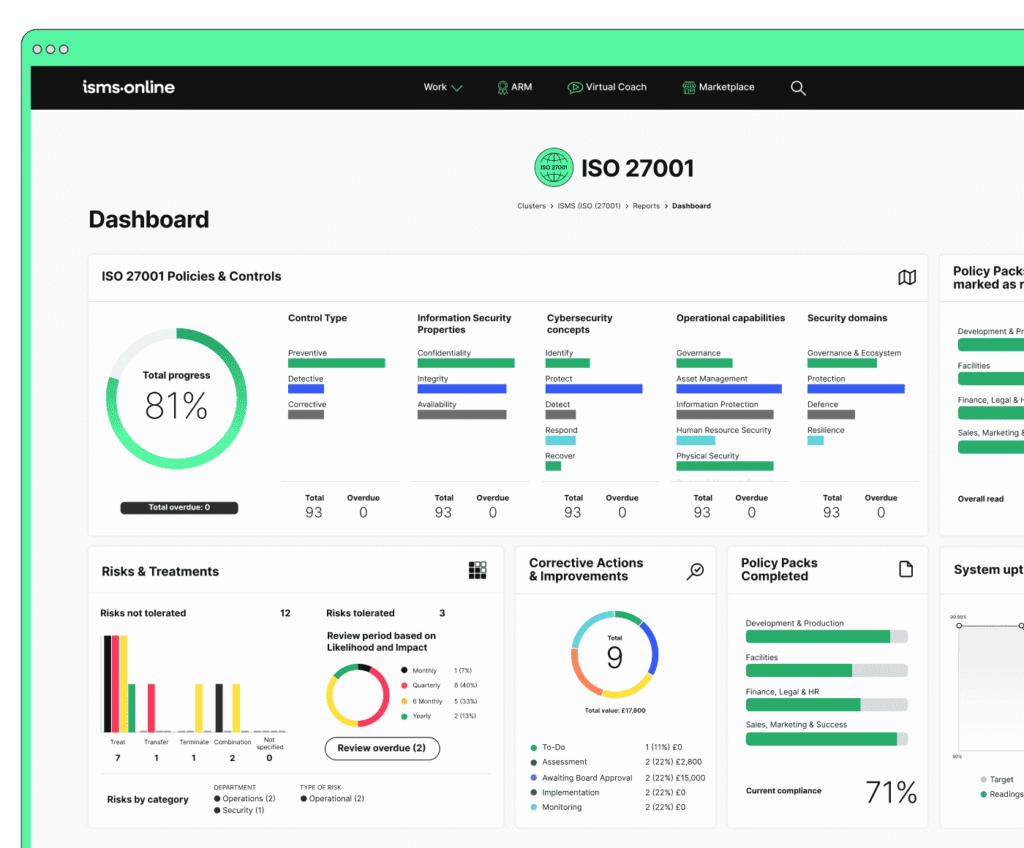

Compliance, Trust, and Auditability with ZK Techniques

For industries like healthcare and finance, compliance is not optional.

- Zero-Knowledge Proofs (ZKPs): Prove integrity of AI computations without exposing sensitive data.

- Signed Audit Trails: Document every data and model update.

- Transparent Testing: Publish security test reports to build stakeholder trust.

Compliance isn’t just about checklists anymore; it’s about proving you can be trusted in a landscape where Google’s rules evolve constantly. In fact, Google’s AI updates are reshaping compliance and SEO in 2025 — a signal of how quickly organizations need to adapt.

Building Trust: Real-World Practices & E‑E‑A‑T Signals

- Use MITRE ATLAS and NIST AI RMF as trusted security frameworks.

- Cite peer-reviewed research and respected cybersecurity advisories.

- Share case studies of AI security breaches and lessons learned.

- Disclose author expertise and experience to demonstrate credibility.

Common Misconceptions and Emerging Risks

❌ “AI is just software, so normal security works.”

Not true: AI pipelines are probabilistic, dynamic, and uniquely vulnerable.

❌ “Adding more data makes models safer.”

More data often means more attack vectors if not governed properly.

❌ “Compliance can wait until we scale.”

Delaying compliance invites fines, lawsuits, and reputational damage.

FAQs

Q: What is the biggest threat to AI systems today?

Prompt injection attacks and data poisoning are currently top risks.

Q: How do I secure my ML pipeline as a small startup?

Start with secured environments, hardened APIs, and anomaly monitoring. Scale security in parallel with growth.

Q: Can Zero Trust actually work for AI workloads?

Yes — many enterprises now use Zero Trust architectures to secure both DevOps and MLOps pipelines.

Q: Why do most ML models never reach production?

Often due to fragmented DevOps-MLOps integration, leaving both inefficiencies and security holes.

Conclusion and Next Steps

The future of AI depends not just on innovation, but on trust. If pipelines can’t be secured, enterprises won’t risk deploying them.

🔑 Action Steps for 2025:

- Threat model your AI systems today.

- Enforce Zero Trust across every stage of MLOps.

- Deploy drift detection to catch silent attacks.

- Use signed audit trails and compliance frameworks.

- Educate teams about emerging risks like prompt injection.

AI’s potential is limitless — but only if we secure it.

The organizations that win in this new era will be those that treat security not as a final step, but as a design principle. If you want to build resilience into your stack and ensure visibility, check out our programmatic SEO strategies for 2025 — they share the same mindset of automation, monitoring, and proactive protection.

👤 Author Box

Written by Abdul Rehman Khan

Founder of Dev Tech Insights and Dark Tech Insights, A R Khan is a tech blogger, programmer, and SEO strategist passionate about the intersection of AI, cybersecurity, and developer tools. Drawing on years of experience analyzing cutting-edge trends, he aims to deliver trustworthy, actionable insights that help developers and enterprises thrive securely in the age of AI.
